You gotta start somewhere… We all know that you need to start somewhere, but where should we start?

This is a question that I had when choosing the database instance class for a POC in AWS. I needed to start somewhere. I did not want to spent too much money, although I do pay by hour…

AWS teaches you to be a little more efficient with things like IO, because you are paying for it and you are limited in about 10 different ways.

Look at the above graph, 60MB/s every day of the week – all large reads.

Database recording throughput at 60000 IOPs, but wait – I paid for 3000.

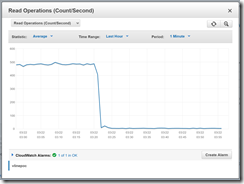

AWS is telling me that I’m only using 500 IOP’s but this is a hard celling. This is limiting my performance… How do i know?

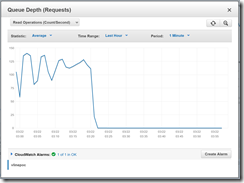

This is how, look at my queue depth

The above is a little too “choppy” for my liking too, so I need to smooth this out.

Across my architecture I don’t see too much activity at all… I do not see enough… Classic bottleneck

So, I do a deep dive before I ask AWS to raise my IOPS.

| DB Instance Classes Optimized for Provisioned IOPS | Dedicated EBS Throughput (Mbps) | Maximum 16k IOPS Rate** | Max Bandwidth (MB/s)** |

| db.m1.large | 500 Mbps | 4000 | 62.5 |

| db.m1.xlarge | 1000 Mbps | 8000 | 125 |

| db.m2.2xlarge | 500 Mbps | 4000 | 62.5 |

| db.m2.4xlarge | 1000 Mbps | 8000 | 125 |

| db.m3.xlarge | 500 Mbps | 4000 | 62.5 |

You gotta start somewhere – remember, I started at the bottom line above… Look at the Max Bandwidth# – that is ME!! In the addition of reads and writes, I’m hitting this pretty hard.

So I need to up my database server class to something with some more IO – in terms of MB/s – even to get close to 3000 IOPs. REMEMBER THIS!! This is an important equation if you are looking at RDS. Sure, you might think that 3000 IOPs is good when provisioning your server – but if you are limited by this bandwith, you are high and dry. (Note that with an 8K page, oracle is doing large reads… We are getting 500 IOPs and 60MB, so it seems that oracle is doing 120K reads??) this seems strange. although I don’t doubt the MB/s

I need a class above!

| db.m3.2xlarge | 1000 Mbps | 8000 | 125 |

| db.m3.xlarge | $1.680 |

| db.m3.2xlarge | $3.360 |

Double memory, double cores and double disk throughput

Click a few buttons – and viola! We are modifying the database to a new class instance

Note that you do lose the database! this was not made clear with the wizard…

| time (utc+11) | event |

| Mar 22 3:04 PM | DB instance restarted |

| Mar 22 3:04 PM | Multi-AZ instance failover completed |

| Mar 22 3:03 PM | Multi-AZ instance failover started |

| Mar 22 2:53 PM | Applying modification to database instance class |

| Mar 22 5:07 AM | Finished DB Instance backup |

| Mar 22 5:03 AM | Backing up DB instance |

I had a SQL plus session open, and I got the following errors mid way

Error starting at line : 12 in command -

select count(1) from proddta.f0911

Error at Command Line : 12 Column : 1

Error report -

SQL Error: No more data to read from socket

Error starting at line : 13 in command -

select count(1) from proddta.F4801

Error at Command Line : 13 Column : 1

Error report -

SQL Error: No more data to read from socket

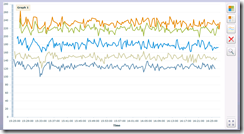

Now look!!! The statistics are vastly different. The database now has enough memory for SGA, so the physical writes are actually significantly lower – because they do not need to be physical anymore.

All fixed with a different class of DB server.

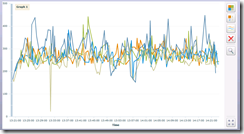

And also, here is the default performance graphs of a system that is hitting some serious ceilings…

Above is the before performance… All scripts running about the same time 250 seconds (give or take)

Here is the exact same payload with a proper sized DB server, the difference is AMAZING! All scripts lower than 250 seconds on average.

No comments:

Post a Comment