I know, you want to start testing AIS (how cool would that be?), but you do not have the right release of JD Edwards. No problem!

Sign up here: https://e92demo.myriad-it.com/

Registration is immediate.

Then you can use this URL to confirm that you can reach the AIS server:

http://e92demo.myriad-it.com/ais/jderest/defaultconfig
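If you would rather script this check than open the URL in a browser, here is a minimal Python sketch that uses only the standard library. It assumes `defaultconfig` answers a plain GET with a JSON document. The helper names `ais_url` and `check_ais_server` are made up for this example and are not part of AIS.

```python
import json
import urllib.request

# Base URL of the demo AIS server used throughout this post.
BASE_URL = "http://e92demo.myriad-it.com/ais/jderest"


def ais_url(base_url: str, service: str) -> str:
    """Join the AIS base URL and a service name such as 'defaultconfig'."""
    return base_url.rstrip("/") + "/" + service.lstrip("/")


def check_ais_server(base_url: str = BASE_URL, timeout: float = 10.0) -> dict:
    """GET the defaultconfig service and return the decoded JSON.

    Raises urllib.error.URLError if the server cannot be reached, and
    json.JSONDecodeError if the reply is not JSON (an assumption above).
    """
    url = ais_url(base_url, "defaultconfig")
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Calling `check_ais_server()` needs network access to the demo server. If it returns a dictionary instead of raising, you are connected.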

Now that you know you can connect to the 9.2 AIS server, you can request a token:

URL: http://e92demo.myriad-it.com/ais/jderest/tokenrequest

Header: Content-Type: application/json

Body:

{
"deviceName":"MyDevice",
"username":"SMOMAN",
"password":"XXXE2"
}

This returns:

{"username":"SMOMAN","environment":"MYDEMO","role":"*ALL","jasserver":"http://E1WEBV3:9203","userInfo":{"token":"044JEAxluwIRZ6N3gXo/EernNMbxcCRhUxZZnfFiOE7bGY=MDE5MDA4OTExNzQxMDc0NDY3NDc4NTMxM015RGV2aWNlMTUxMzI0NDkxNjg1Mg==","langPref":" ","locale":"en","dateFormat":"DMY","dateSeperator":"/","simpleDateFormat":"dd/MM/yy","decimalFormat":".","addressNumber":1007,"alphaName":"SYED ALTHAF GAFFAR","appsRelease":"E920","country":" ","username":"SMOMAN"},"userAuthorized":false,"version":null,"poStringJSON":null,"altPoStringJSON":null,"aisSessionCookie":"0BFUbPx3aPdeAQ7kLfKkFhZX6O1SNHjdmxXA6F534I9YWjRqdtDz!-1131900178!1513244916855","adminAuthorized":false,"deprecated":true }

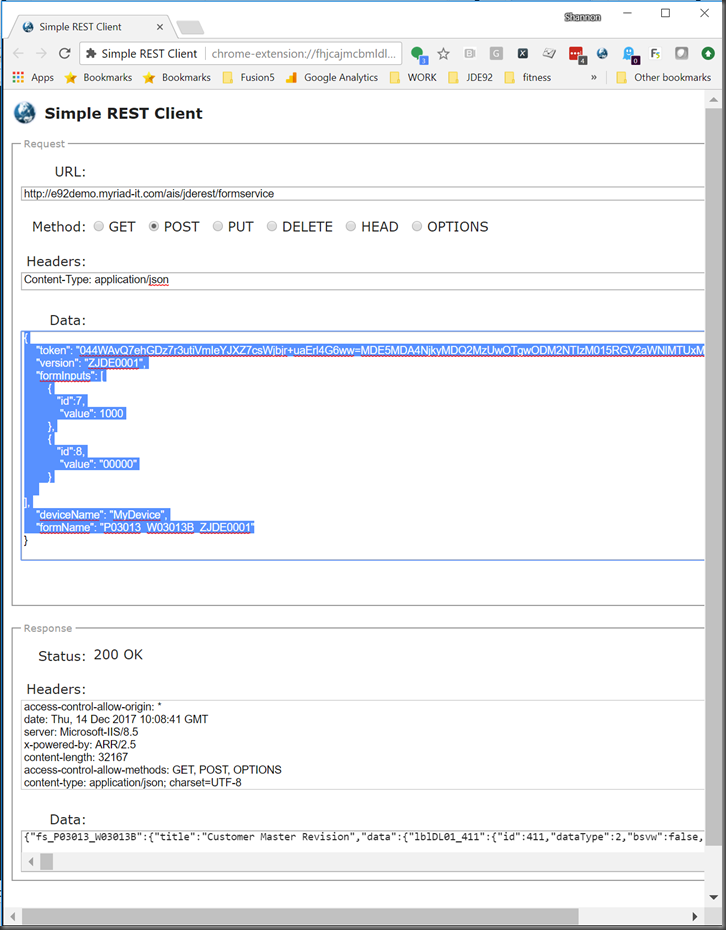

Cool, so we have a login token. Note that I'm using a Chrome plugin called “Simple REST Client”; there are heaps of similar tools out there. Also note that I had to explicitly add a Content-Type header for JSON. Older tools releases did not need this, but the latest tools release does.
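The same token request can be scripted. This is a sketch in Python with `urllib.request`, assuming the demo endpoint and the body fields shown above; `build_token_request`, `extract_token` and `get_token` are hypothetical helper names. It sets the Content-Type header explicitly, for the reason just described, and reads the token from `userInfo.token` in the response.

```python
import json
import urllib.request

TOKEN_URL = "http://e92demo.myriad-it.com/ais/jderest/tokenrequest"


def build_token_request(username: str, password: str,
                        device_name: str = "MyDevice",
                        url: str = TOKEN_URL) -> urllib.request.Request:
    """Build a POST to tokenrequest carrying the JSON body shown above."""
    body = {"deviceName": device_name, "username": username, "password": password}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        # The latest tools release needs this header; older ones did not.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_token(response_text: str) -> str:
    """Pull userInfo.token out of a tokenrequest response."""
    return json.loads(response_text)["userInfo"]["token"]


def get_token(username: str, password: str, timeout: float = 10.0) -> str:
    """Send the request and return the token (requires network access)."""
    req = build_token_request(username, password)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_token(resp.read().decode("utf-8"))
```

Keeping the request building and the response parsing separate makes both easy to check without a live server; only `get_token` actually talks to AIS.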

So, what next? Let’s look at a popular application.

Log in to your demo URL:

http://e92demo.myriad-it.com/jde/E1Menu.maf

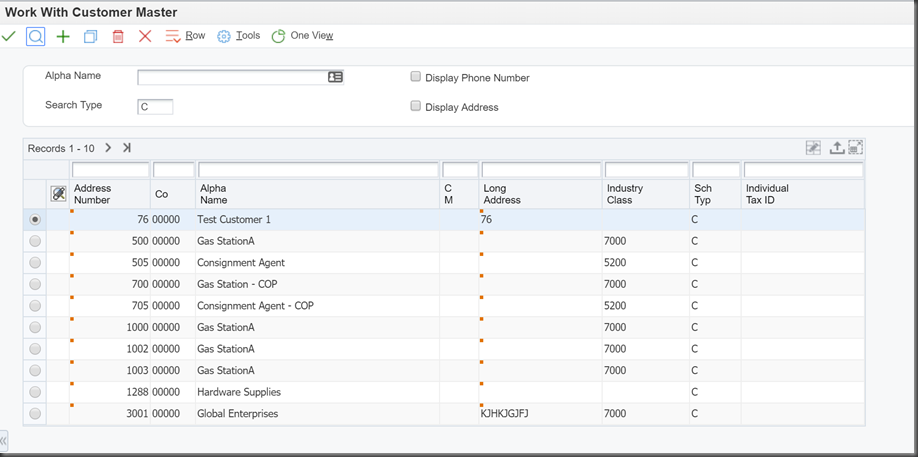

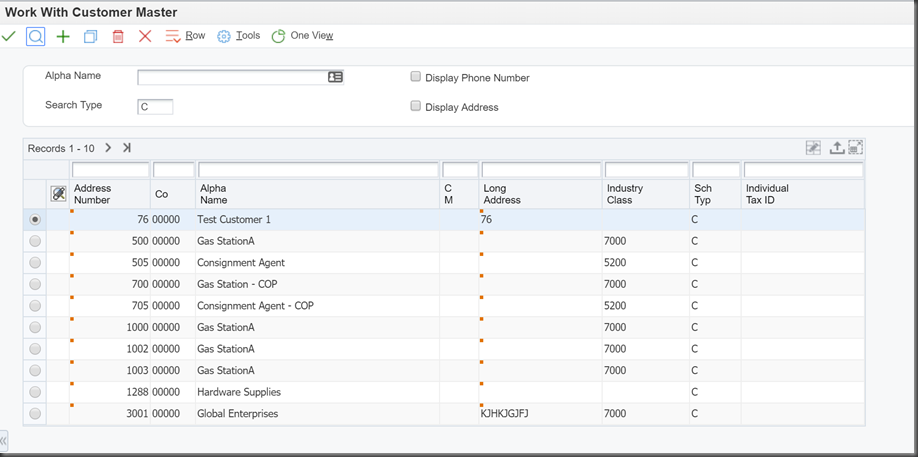

Then go to your favourite application, P03013 (Work With Customer Master).

Choose a customer: Gas Station A. Cool.

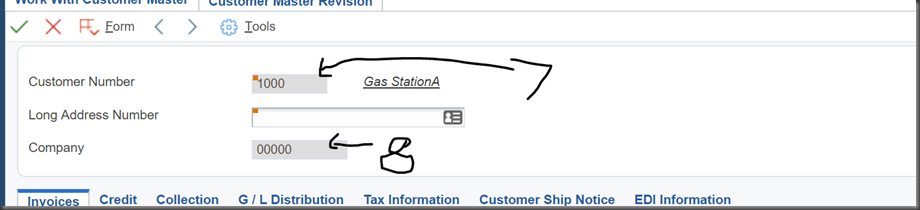

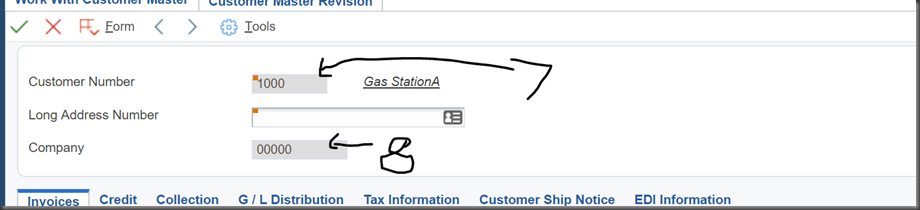

What do we do now? I generally do two things. First, Tools > Collaborate > Parameterised URL, which gives you a URL containing the values passed into the form.

So we can see that we are passing in 1000 for item 7 (AN8), 00000 for item 8 (company) and 1 for item 11.

That's pretty easy.
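Those item numbers are what AIS takes as form inputs. A tiny, hypothetical helper that turns an {item number: value} mapping into a `formInputs` list could look like this. It copies the shape of the request that follows (integer `id`, value passed through unchanged); keep the company as the string "00000" so the leading zeros survive.

```python
def build_form_inputs(items: dict) -> list:
    """Turn {form interconnect item number: value} into AIS formInputs.

    Entries are ordered by item number; values are passed through as-is,
    so pass "00000" (a string) rather than 0 for the company.
    """
    return [{"id": item_id, "value": value} for item_id, value in sorted(items.items())]
```

For example, `build_form_inputs({7: 1000, 8: "00000"})` yields the same two entries as the `formInputs` array in the request below.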

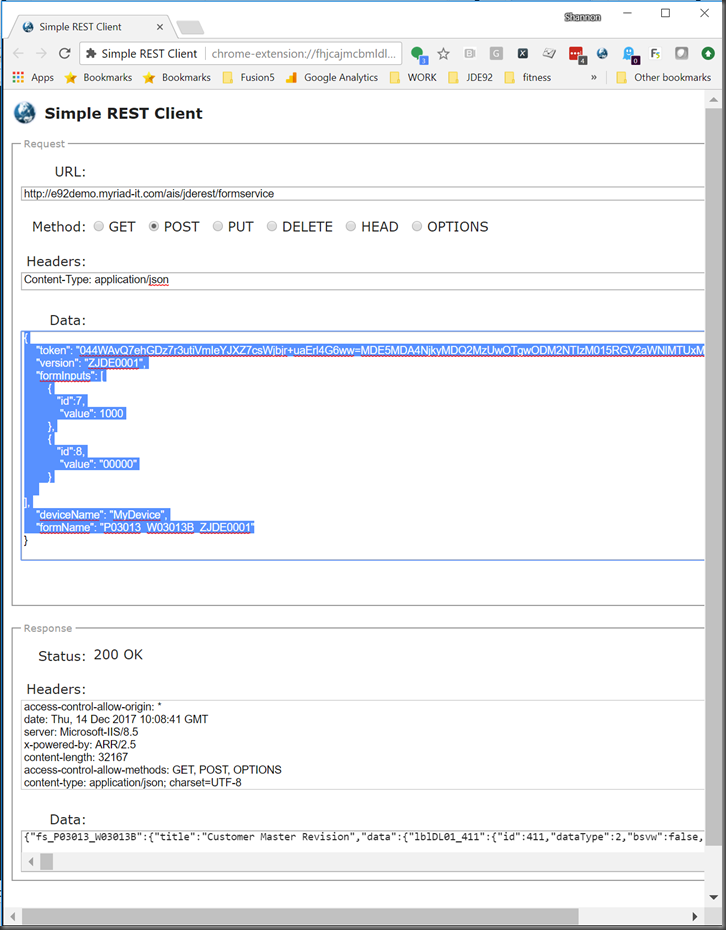

So let’s use our token and our skills to show us some data (and perhaps change some data).

URL: http://e92demo.myriad-it.com/ais/jderest/formservice

Header: Content-Type: application/json

Body:

{
"token": "044JEAxluwIRZ6N3gXo/EernNMbxcCRhUxZZnfFiOE7bGY=MDE5MDA4OTExNzQxMDc0NDY3NDc4NTMxM015RGV2aWNlMTUxMzI0NDkxNjg1Mg==",
"version": "ZJDE0001",
"formInputs": [
{
"id":7,
"value": 1000
},
{
"id":8,
"value": "00000"
}
],
"deviceName": "MyDevice",
"formName": "P03013_W03013B_ZJDE0001"
}
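Scripted, the formservice call is just another JSON POST. This Python sketch assumes the demo URL and exactly the body fields shown above (token, version, formInputs, deviceName, formName); `build_formservice_request` and `call_formservice` are hypothetical names, and the token is whatever your own tokenrequest returned.

```python
import json
import urllib.request

FORMSERVICE_URL = "http://e92demo.myriad-it.com/ais/jderest/formservice"


def build_formservice_request(token: str, form_name: str, version: str,
                              form_inputs: list, device_name: str = "MyDevice",
                              url: str = FORMSERVICE_URL) -> urllib.request.Request:
    """Build the formservice POST with the same body fields as above."""
    body = {
        "token": token,
        "version": version,
        "formInputs": form_inputs,
        "deviceName": device_name,
        "formName": form_name,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_formservice(req: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON reply (requires network access)."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```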

Wow, that is a LOT of JSON:

{"fs_P03013_W03013B":{"title":"Customer Master Revision","data":{"lblDL01_411":{"id":411,"dataType":2,"bsvw":false,"title":"Blank -Cust. Type ID 40/CI","visible":true,"longName":"lblDL01_411","editable":false,"value":"Blank -Cust. Type ID 40/CI"},"lblDeductionManager_419":{"id":419,"dataType":2,"bsvw":false,"title":"Deduction Manager","visible":true,"longName":"lblDeductionManager_419","editable":false,"value":"Deduction Manager"},"txtDeductionManagers_420":{"id":420,"internalValue":" ","dataType":2,"bsvw":false,"title":"Deduction Managers","visible":true,"longName":"txtDeductionManagers_420","assocDesc":"","editable":true,"value":"\" \""},"lblDefault_300":{"id":300,"dataType":2,"bsvw":false,"title":"Default","visible":true,"longName":"lblDefault_300","editable":false,"value":"Default"},"radNone_422":{"id":422,"internalValue":"0","dataType":1,"bsvw":true,"title":"None","visible":true,"longName":"radNone_422","editable":true,"value":"0"},"txtDefaultIdentificationCodeQualifier_301":{"id":301,"internalValue":" ","dataType":2,"bsvw":true,"title":"Default","staticText":"Default","visible":true,"longName":"txtDefaultIdentificationCodeQualifier_301","assocDesc":".","editable":true,"value":""},"radPickPack_423":{"id":423,"internalValue":"0","dataType":1,"bsvw":true,"title":"Pick Pack","visible":true,"longName":"radPickPack_423","editable":true,"value":"0"},"lblDefault_302":{"id":302,"dataType":2,"bsvw":false,"title":"Default","visible":true,"longName":"lblDefault_302","editable":false,"value":"Default"},"radStandardCarton_424":{"id":424,"internalValue":"0","dataType":1,"bsvw":true,"title":"Standard Carton","visible":true,"longName":"radStandardCarton_424","editable":true,"value":"0"},"txtDefaultReferenceNumberQualifier1_303":{"id":303,"internalValue":" 
","dataType":2,"bsvw":true,"title":"Default","staticText":"Default","visible":true,"longName":"txtDefaultReferenceNumberQualifier1_303","assocDesc":".","editable":true,"value":""},"chkPackagingCode_306":{"id":306,"internalValue":" ","dataType":1,"bsvw":true,"title":"Packaging Code","visible":true,"longName":"chkPackagingCode_306","editable":true,"value":"off"},"chkTransportationMethod_307":{"id":307,"internalValue":" ","dataType":1,"bsvw":true,"title":"Transportation Method","visible":true,"longName":"chkTransportationMethod_307","editable":true,"value":"off"},"chkRequiresEquipment_308":{"id":308,"internalValue":" ","dataType":1,"bsvw":true,"title":"Requires Equipment","visible":true,"longName":"chkRequiresEquipment_308","editable":true,"value":"off"},"lblDL01_429":{"id":429,"dataType":2,"bsvw":false,"title":"Default - Item Type ID 40/II","visible":true,"longName":"lblDL01_429","editable":false,"value":"Default - Item Type ID 40/II"},"chkIdentificationCode1_309":{"id":309,"internalValue":" ","dataType":1,"bsvw":true,"title":"Identification Code 1","visible":true,"longName":"chkIdentificationCode1_309","editable":true,"value":"off"},"lblTaxExplCode_197":{"id":197,"dataType":2,"bsvw":false,"title":"Tax Expl Code","visible":true,"longName":"lblTaxExplCode_197","editable":false,"value":"Tax Expl Code"},"txtTaxExplCode_198":{"id":198,"internalValue":" ","dataType":2,"bsvw":true,"title":"Tax Expl Code","visible":true,"longName":"txtTaxExplCode_198","assocDesc":".","editable":true,"value":""},"lblTaxRateArea_199":{"id":199,"dataType":2,"bsvw":false,"title":"Tax Rate/Area","visible":true,"longName":"lblTaxRateArea_199","editable":false,"value":"Tax Rate/Area"},"lblBatchProcessingMode_400":{"id":400,"dataType":1,"bsvw":false,"title":"Batch Processing Mode","visible":true,"longName":"lblBatchProcessingMode_400","editable":false,"value":"Batch Processing Mode"},"txtBatchProcessingMode_401":{"id":401,"internalValue":"I","dataType":1,"bsvw":true,"title":"Batch Processing 
Mode","visible":true,"longName":"txtBatchProcessingMode_401","assocDesc":"Inhibited From Processing","editable":true,"value":"I"},"lblCustomerTypeIdentifier_402":{"id":402,"dataType":1,"bsvw":false,"title":"Customer Type Identifier","visible":true,"longName":"lblCustomerTypeIdentifier_402","editable":false,"value":"Customer Type Identifier"},"txtCustomerTypeIdentifier_403":{"id":403,"internalValue":" ","dataType":1,"bsvw":true,"title":"Customer Type Identifier","visible":true,"longName":"txtCustomerTypeIdentifier_403","assocDesc":"Blank -Cust. Type ID 40/CI","editable":true,"value":""},"lblItemTypeIdentifier_404":{"id":404,"dataType":1,"bsvw":false,"title":"Item Type Identifier","visible":true,"longName":"lblItemTypeIdentifier_404","editable":false,"value":"Item Type Identifier"},"txtItemTypeIdentifier_405":{"id":405,"internalValue":" ","dataType":1,"bsvw":true,"title":"Item Type Identifier","visible":true,"longName":"txtItemTypeIdentifier_405","assocDesc":"Default - Item Type ID 40/II","editable":true,"value":""},"lblAmountDecimals_406":{"id":406,"dataType":9,"bsvw":false,"title":"Amount Decimals","visible":true,"longName":"lblAmountDecimals_406","editable":false,"value":"Amount Decimals"},"txtAmountDecimals_407":{"id":407,"internalValue":0,"dataType":9,"bsvw":true,"title":"Amount Decimals","staticText":"Amount Decimals","visible":true,"longName":"txtAmountDecimals_407","assocDesc":"","editable":true,"value":"0"},"lblQuantityDecimals_408":{"id":408,"dataType":9,"bsvw":false,"title":"Quantity Decimals","visible":true,"longName":"lblQuantityDecimals_408","editable":false,"value":"Quantity Decimals"},"txtQuantityDecimals_409":{"id":409,"internalValue":0,"dataType":9,"bsvw":true,"title":"Quantity Decimals","staticText":"Quantity 
Decimals","visible":true,"longName":"txtQuantityDecimals_409","assocDesc":"","editable":true,"value":"0"},"lblCompany_19":{"id":19,"dataType":2,"bsvw":false,"title":"Company","visible":true,"longName":"lblCompany_19","editable":false,"value":"Company"},"txtLongAddressNumber_16":{"id":16,"internalValue":" ","dataType":2,"bsvw":false,"title":"Long Address Number","staticText":"Long Address Number","visible":true,"longName":"txtLongAddressNumber_16","editable":true,"value":""},"lblLongAddressNumber_15":{"id":15,"dataType":2,"bsvw":false,"title":"Long Address Number","visible":true,"longName":"lblLongAddressNumber_15","editable":false,"value":"Long Address Number"},"txtAddressNumber_14":{"id":14,"internalValue":1000,"dataType":9,"bsvw":true,"title":"Customer Number","staticText":"Customer Number","visible":true,"longName":"txtAddressNumber_14","assocDesc":"Gas StationA","editable":false,"value":"1000"},"lblCustomerNumber_13":{"id":13,"dataType":9,"bsvw":false,"title":"Customer Number","visible":true,"longName":"lblCustomerNumber_13","editable":false,"value":"Customer Number"},"lblDefault_296":{"id":296,"dataType":2,"bsvw":false,"title":"Default","visible":true,"longName":"lblDefault_296","editable":false,"value":"Default"},"txtDefaultIdentificationCodeQualifier1_297":{"id":297,"internalValue":" ","dataType":2,"bsvw":true,"title":"Default Identification Code Qualifier 1","visible":true,"longName":"txtDefaultIdentificationCodeQualifier1_297","assocDesc":".","editable":true,"value":""},"lblDefault_298":{"id":298,"dataType":2,"bsvw":false,"title":"Default","visible":true,"longName":"lblDefault_298","editable":false,"value":"Default"},"txtDefaultIdentificationCodeQualifier2_299":{"id":299,"internalValue":" ","dataType":2,"bsvw":true,"title":"Default Identification Code Qualifier 2","visible":true,"longName":"txtDefaultIdentificationCodeQualifier2_299","assocDesc":".","editable":true,"value":""},"lblPaymentTermsAR_29":{"id":29,"dataType":2,"bsvw":false,"title":"Payment Terms 
- A/R","visible":true,"longName":"lblPaymentTermsAR_29","editable":false,"value":"Payment Terms - A/R"},"txtARModelDocumentCompany_190":{"id":190,"internalValue":" ","dataType":2,"bsvw":true,"title":"A/R Model Document Company","visible":true,"longName":"txtARModelDocumentCompany_190","editable":true,"value":""},"lblModelJEDocTypeNoCo_191":{"id":191,"dataType":2,"bsvw":false,"title":"Model JE Doc Type / No / Co","visible":true,"longName":"lblModelJEDocTypeNoCo_191","editable":false,"value":"Model JE Doc Type / No / Co"},"txtARModelJEDocumentType_192":{"id":192,"internalValue":" ","dataType":2,"bsvw":true,"title":"Model JE Doc Type / No / Co","staticText":"Model JE Doc Type / No / Co","visible":true,"longName":"txtARModelJEDocumentType_192","editable":true,"value":""},"txtARModelJEDocument_193":{"id":193,"internalValue":0,"dataType":9,"bsvw":true,"title":"A/R Model JE Document","visible":true,"longName":"txtARModelJEDocument_193","editable":true,"value":"0"},"lblAccountNumber_194":{"id":194,"dataType":2,"bsvw":false,"title":"Account Number","visible":true,"longName":"lblAccountNumber_194","editable":false,"value":"Account Number"},"txtAccountNumber_195":{"id":195,"internalValue":" ","dataType":2,"bsvw":false,"title":"Account Number","staticText":"Account Number","visible":true,"longName":"txtAccountNumber_195","assocDesc":"","editable":true,"value":""},"lblDL01_196":{"id":196,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_196","editable":false,"value":""},"lblGLOffset_188":{"id":188,"dataType":2,"bsvw":false,"title":"G/L Offset","visible":true,"longName":"lblGLOffset_188","editable":false,"value":"G/L Offset"},"txtGLClass_189":{"id":189,"internalValue":" ","dataType":2,"bsvw":true,"title":"G/L Offset","staticText":"G/L 
Offset","visible":true,"longName":"txtGLClass_189","assocDesc":"","editable":true,"value":""},"txtCompany_20":{"id":20,"internalValue":"00000","dataType":2,"bsvw":true,"title":"Company","staticText":"Company","visible":true,"longName":"txtCompany_20","editable":false,"value":"00000"},"lblCurrencyCode_39":{"id":39,"dataType":2,"bsvw":false,"title":"Currency Code","visible":true,"longName":"lblCurrencyCode_39","editable":false,"value":"Currency Code"},"txtCurrencyCodeABAmounts_38":{"id":38,"internalValue":"USD","dataType":2,"bsvw":true,"title":"Currency Code - A/B Amounts","visible":true,"longName":"txtCurrencyCodeABAmounts_38","assocDesc":"U.S. Dollar","editable":true,"value":"USD"},"lblABAmountCodes_37":{"id":37,"dataType":2,"bsvw":false,"title":"A/B Amount Codes","visible":true,"longName":"lblABAmountCodes_37","editable":false,"value":"A/B Amount Codes"},"txtConfigurationPickandPack_280":{"id":280,"internalValue":" ","dataType":2,"bsvw":true,"title":"Configuration Pick and Pack","visible":true,"longName":"txtConfigurationPickandPack_280","assocDesc":"","editable":true,"value":""},"lblStandardCartonPackConfig_281":{"id":281,"dataType":2,"bsvw":false,"title":"Standard Carton Pack Config","visible":true,"longName":"lblStandardCartonPackConfig_281","editable":false,"value":"Standard Carton Pack Config"},"txtConfigurationStandardCartonPack_282":{"id":282,"internalValue":" ","dataType":2,"bsvw":true,"title":"Configuration Standard Carton Pack","visible":true,"longName":"txtConfigurationStandardCartonPack_282","assocDesc":"","editable":true,"value":""},"lblShippingLabelProgram_283":{"id":283,"dataType":2,"bsvw":false,"title":"Shipping Label Program","visible":true,"longName":"lblShippingLabelProgram_283","editable":false,"value":"Shipping Label Program"},"txtShippingLabelProgram_284":{"id":284,"internalValue":" ","dataType":2,"bsvw":true,"title":"Shipping Label 
Program","visible":true,"longName":"txtShippingLabelProgram_284","assocDesc":"","editable":true,"value":""},"lblPickPackConfig_279":{"id":279,"dataType":2,"bsvw":false,"title":"Pick Pack Config","visible":true,"longName":"lblPickPackConfig_279","editable":false,"value":"Pick Pack Config"},"txtPaymentInstrument_32":{"id":32,"internalValue":" ","dataType":1,"bsvw":true,"title":"Payment Instrument","visible":true,"longName":"txtPaymentInstrument_32","assocDesc":"Default (A/R & A/P)","editable":true,"value":""},"lblPaymentInstrument_31":{"id":31,"dataType":1,"bsvw":false,"title":"Payment Instrument","visible":true,"longName":"lblPaymentInstrument_31","editable":false,"value":"Payment Instrument"},"txtPaymentTermsAR_30":{"id":30,"internalValue":"P2","dataType":2,"bsvw":true,"title":"Payment Terms - A/R","visible":true,"longName":"txtPaymentTermsAR_30","assocDesc":"P2 payment term","editable":true,"value":"P2"},"txtParentNumber_46":{"id":46,"internalValue":0,"dataType":9,"bsvw":false,"title":"Parent Number","visible":true,"longName":"txtParentNumber_46","assocDesc":"","editable":true,"value":"0"},"lblParentNumber_45":{"id":45,"dataType":9,"bsvw":false,"title":"Parent Number","visible":true,"longName":"lblParentNumber_45","editable":false,"value":"Parent Number"},"lblShippingLabelVersion_285":{"id":285,"dataType":2,"bsvw":false,"title":"Shipping Label Version","visible":true,"longName":"lblShippingLabelVersion_285","editable":false,"value":"Shipping Label Version"},"txtShippingLabelVersion_286":{"id":286,"internalValue":" ","dataType":2,"bsvw":true,"title":"Shipping Label Version","visible":true,"longName":"txtShippingLabelVersion_286","editable":true,"value":""},"txtSendInvoiceto_42":{"id":42,"internalValue":"C","dataType":1,"bsvw":true,"title":"Send Invoice to","visible":true,"longName":"txtSendInvoiceto_42","assocDesc":"Customer","editable":true,"value":"C"},"lblSendInvoiceto_41":{"id":41,"dataType":1,"bsvw":false,"title":"Send Invoice 
to","visible":true,"longName":"lblSendInvoiceto_41","editable":false,"value":"Send Invoice to"},"txtCurrencyCode_40":{"id":40,"internalValue":" ","dataType":2,"bsvw":true,"title":"Currency Code","visible":true,"longName":"txtCurrencyCode_40","assocDesc":"","editable":true,"value":""},"lblAutoReceiptsExecutionList_59":{"id":59,"dataType":2,"bsvw":false,"title":"Auto Receipts Execution List","visible":true,"longName":"lblAutoReceiptsExecutionList_59","editable":false,"value":"Auto Receipts Execution List"},"chkAutoReceiptYN_56":{"id":56,"internalValue":"Y","dataType":1,"bsvw":true,"title":"Auto Receipt (Y/N)","visible":true,"longName":"chkAutoReceiptYN_56","editable":true,"value":"on"},"lblDL01_55":{"id":55,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_55","editable":false,"value":""},"lblDL01_54":{"id":54,"dataType":2,"bsvw":false,"title":"Customer","visible":true,"longName":"lblDL01_54","editable":false,"value":"Customer"},"chkHoldInvoices_51":{"id":51,"internalValue":"N","dataType":1,"bsvw":true,"title":"Hold Invoices","visible":true,"longName":"chkHoldInvoices_51","editable":true,"value":"off"},"txtPersonOpeningAccount_69":{"id":69,"internalValue":"KE5982437","dataType":2,"bsvw":true,"title":"Person Opening Account","visible":true,"longName":"txtPersonOpeningAccount_69","assocDesc":"","editable":true,"value":"KE5982437"},"lblPersonOpeningAccount_68":{"id":68,"dataType":2,"bsvw":false,"title":"Person Opening Account","visible":true,"longName":"lblPersonOpeningAccount_68","editable":false,"value":"Person Opening Account"},"txtTemporaryCreditMessage_67":{"id":67,"internalValue":" ","dataType":2,"bsvw":true,"title":"Temporary Credit Message","visible":true,"longName":"txtTemporaryCreditMessage_67","assocDesc":".","editable":true,"value":""},"lblDL01_392":{"id":392,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_392","editable":false,"value":""},"lblDL01_393":{"id":393,"dataType":2,"bsvw":false,"title":"U.S. 
Dollar","visible":true,"longName":"lblDL01_393","editable":false,"value":"U.S. Dollar"},"lblDL01_265":{"id":265,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_265","editable":false,"value":""},"lblTemporaryCreditMessage_66":{"id":66,"dataType":2,"bsvw":false,"title":"Temporary Credit Message","visible":true,"longName":"lblTemporaryCreditMessage_66","editable":false,"value":"Temporary Credit Message"},"txtCreditLimit_63":{"id":63,"internalValue":0,"dataType":9,"bsvw":true,"title":"Credit Limit","visible":true,"longName":"txtCreditLimit_63","assocDesc":"","editable":true,"value":"0"},"lblCreditLimit_62":{"id":62,"dataType":9,"bsvw":false,"title":"Credit Limit","visible":true,"longName":"lblCreditLimit_62","editable":false,"value":"Credit Limit"},"txtAutoReceiptsExecutionList_60":{"id":60,"internalValue":" ","dataType":2,"bsvw":true,"title":"Auto Receipts Execution List","staticText":"Auto Receipts Execution List","visible":true,"longName":"txtAutoReceiptsExecutionList_60","editable":true,"value":""},"lblDL01_79":{"id":79,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_79","editable":false,"value":"."},"lblDL01_78":{"id":78,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_78","editable":false,"value":"."},"txtCreditManager_480":{"id":480,"internalValue":" ","dataType":2,"bsvw":true,"title":"Credit Manager","visible":true,"longName":"txtCreditManager_480","assocDesc":".","editable":true,"value":""},"lblCreditManager_481":{"id":481,"dataType":2,"bsvw":false,"title":"Credit Manager","visible":true,"longName":"lblCreditManager_481","editable":false,"value":"Credit 
Manager"},"lblDL01_482":{"id":482,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_482","editable":false,"value":"."},"lblDL01_472":{"id":472,"dataType":2,"bsvw":false,"title":"Print/Mail","visible":true,"longName":"lblDL01_472","editable":false,"value":"Print/Mail"},"lblDL01_473":{"id":473,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_473","editable":false,"value":""},"lblDL01_474":{"id":474,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_474","editable":false,"value":"."},"txtDunBradstreet_233":{"id":233,"internalValue":" ","dataType":2,"bsvw":true,"title":"Dun Bradstreet","visible":true,"longName":"txtDunBradstreet_233","editable":true,"value":""},"lblDL01_475":{"id":475,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_475","editable":false,"value":"."},"lblDL01_476":{"id":476,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_476","editable":false,"value":"."},"chkCollectionReport_114":{"id":114,"internalValue":"Y","dataType":1,"bsvw":true,"title":"Collection Report","visible":true,"longName":"chkCollectionReport_114","editable":true,"value":"on"},"lblDL01_477":{"id":477,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_477","editable":false,"value":"."},"chkPrintStatement_115":{"id":115,"internalValue":"Y","dataType":1,"bsvw":true,"title":"Print Statement","visible":true,"longName":"chkPrintStatement_115","editable":true,"value":"on"},"chkDelinquencyFees_116":{"id":116,"internalValue":"N","dataType":1,"bsvw":true,"title":"Delinquency Fees","visible":true,"longName":"chkDelinquencyFees_116","editable":true,"value":"off"},"txtCreditMessage_77":{"id":77,"internalValue":" ","dataType":2,"bsvw":false,"title":"Credit Message","visible":true,"longName":"txtCreditMessage_77","assocDesc":".","editable":true,"value":""},"chkDelinquencyNotices_117":{"id":117,"internalValue":"Y","dataType":1,"bsvw":true,"title":"Delinquency 
Notices","visible":true,"longName":"chkDelinquencyNotices_117","editable":true,"value":"on"},"lblCreditMessage_76":{"id":76,"dataType":2,"bsvw":false,"title":"Credit Message","visible":true,"longName":"lblCreditMessage_76","editable":false,"value":"Credit Message"},"txtDateAccountOpened_75":{"id":75,"internalValue":"1299715200000","dataType":11,"bsvw":true,"title":"Date Account Opened","visible":true,"longName":"txtDateAccountOpened_75","editable":true,"value":"10/03/11"},"lblDateAccountOpened_74":{"id":74,"dataType":11,"bsvw":false,"title":"Date Account Opened","visible":true,"longName":"lblDateAccountOpened_74","editable":false,"value":"Date Account Opened"},"txtDateofLastCreditReview_73":{"id":73,"dataType":11,"bsvw":true,"title":"Date of Last Credit Review","visible":true,"longName":"txtDateofLastCreditReview_73","editable":true,"value":""},"lblDateofLastCreditReview_72":{"id":72,"dataType":11,"bsvw":false,"title":"Date of Last Credit Review","visible":true,"longName":"lblDateofLastCreditReview_72","editable":false,"value":"Date of Last Credit Review"},"txtRecallforReviewDate_71":{"id":71,"dataType":11,"bsvw":true,"title":"Recall for Review Date","visible":true,"longName":"txtRecallforReviewDate_71","editable":true,"value":""},"lblRecallforReviewDate_70":{"id":70,"dataType":11,"bsvw":false,"title":"Recall for Review Date","visible":true,"longName":"lblRecallforReviewDate_70","editable":false,"value":"Recall for Review Date"},"txtMinimumCashReceiptPercentage_492":{"id":492,"internalValue":0,"dataType":9,"bsvw":true,"title":"Minimum Cash Receipt Percentage","staticText":"Minimum Cash Receipt Percentage","visible":true,"longName":"txtMinimumCashReceiptPercentage_492","editable":true,"value":"0"},"lblMinimumCashReceiptPercentage_493":{"id":493,"dataType":9,"bsvw":false,"title":"Minimum Cash Receipt Percentage","visible":true,"longName":"lblMinimumCashReceiptPercentage_493","editable":false,"value":"Minimum Cash Receipt 
Percentage"},"txtCollectionManager_483":{"id":483,"internalValue":" ","dataType":2,"bsvw":true,"title":"Collection Manager","visible":true,"longName":"txtCollectionManager_483","assocDesc":"No collection mgr assigned","editable":true,"value":""},"lblLastReviewedBy_80":{"id":80,"dataType":2,"bsvw":false,"title":"Last Reviewed By","visible":true,"longName":"lblLastReviewedBy_80","editable":false,"value":"Last Reviewed By"},"lblCollectionManager_484":{"id":484,"dataType":2,"bsvw":false,"title":"Collection Manager","visible":true,"longName":"lblCollectionManager_484","editable":false,"value":"Collection Manager"},"lblDL01_485":{"id":485,"dataType":2,"bsvw":false,"title":"No collection mgr assigned","visible":true,"longName":"lblDL01_485","editable":false,"value":"No collection mgr assigned"},"lblDL01_486":{"id":486,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_486","editable":false,"value":""},"txtPolicyCompany_247":{"id":247,"internalValue":"00000","dataType":2,"bsvw":false,"title":"Policy Company","visible":true,"longName":"txtPolicyCompany_247","editable":true,"value":"00000"},"txtTRWRating_88":{"id":88,"internalValue":" ","dataType":2,"bsvw":true,"title":"TRW Rating","visible":true,"longName":"txtTRWRating_88","editable":true,"value":""},"txtTRWDate_87":{"id":87,"dataType":11,"bsvw":true,"title":"TRW Date","staticText":"TRW Date","visible":true,"longName":"txtTRWDate_87","editable":true,"value":""},"lblTRWDate_86":{"id":86,"dataType":11,"bsvw":false,"title":"TRW Date","visible":true,"longName":"lblTRWDate_86","editable":false,"value":"TRW Date"},"txtDunBradstreetDate_85":{"id":85,"dataType":11,"bsvw":true,"title":"Dun Bradstreet Date","staticText":"Dun Bradstreet Date","visible":true,"longName":"txtDunBradstreetDate_85","editable":true,"value":""},"lblDunBradstreetDate_84":{"id":84,"dataType":11,"bsvw":false,"title":"Dun Bradstreet Date","visible":true,"longName":"lblDunBradstreetDate_84","editable":false,"value":"Dun Bradstreet 
Date"},"txtFinancialStmtsonHand_83":{"id":83,"dataType":11,"bsvw":true,"title":"Financial Stmts on Hand","staticText":"Financial Stmts on Hand","visible":true,"longName":"txtFinancialStmtsonHand_83","assocDesc":"","editable":true,"value":""},"lblFinancialStmtsonHand_82":{"id":82,"dataType":11,"bsvw":false,"title":"Financial Stmts on Hand","visible":true,"longName":"lblFinancialStmtsonHand_82","editable":false,"value":"Financial Stmts on Hand"},"txtLastReviewedBy_81":{"id":81,"internalValue":" ","dataType":2,"bsvw":true,"title":"Last Reviewed By","visible":true,"longName":"txtLastReviewedBy_81","assocDesc":"","editable":true,"value":""},"txtABCCodeSales_91":{"id":91,"internalValue":"C","dataType":1,"bsvw":true,"title":"ABC Code Sales","staticText":"ABC Code Sales","visible":true,"longName":"txtABCCodeSales_91","assocDesc":"Grade C","editable":true,"value":"C"},"lblABCCodeSales_90":{"id":90,"dataType":1,"bsvw":false,"title":"ABC Code Sales","visible":true,"longName":"lblABCCodeSales_90","editable":false,"value":"ABC Code Sales"},"lblDL01_451":{"id":451,"dataType":2,"bsvw":false,"title":"Grade C","visible":true,"longName":"lblDL01_451","editable":false,"value":"Grade C"},"lblDL01_452":{"id":452,"dataType":2,"bsvw":false,"title":"P2 payment term","visible":true,"longName":"lblDL01_452","editable":false,"value":"P2 payment term"},"lblDL01_453":{"id":453,"dataType":2,"bsvw":false,"title":"Default (A/R & A/P)","visible":true,"longName":"lblDL01_453","editable":false,"value":"Default (A/R & A/P)"},"lblDL01_455":{"id":455,"dataType":2,"bsvw":false,"title":"Gas StationA","visible":true,"longName":"lblDL01_455","editable":false,"value":"Gas StationA"},"lblPolicyNameCompany_99":{"id":99,"dataType":2,"bsvw":false,"title":"Policy Name/Company","visible":true,"longName":"lblPolicyNameCompany_99","editable":false,"value":"Policy Name/Company"},"txtABCCodeAverageDays_95":{"id":95,"internalValue":"C","dataType":1,"bsvw":true,"title":"ABC Code Average Days","staticText":"ABC Code 
Average Days","visible":true,"longName":"txtABCCodeAverageDays_95","assocDesc":"Grade C","editable":true,"value":"C"},"lblABCCodeAverageDays_94":{"id":94,"dataType":1,"bsvw":false,"title":"ABC Code Average Days","visible":true,"longName":"lblABCCodeAverageDays_94","editable":false,"value":"ABC Code Average Days"},"txtABCCodeMargin_93":{"id":93,"internalValue":"C","dataType":1,"bsvw":true,"title":"ABC Code Margin","staticText":"ABC Code Margin","visible":true,"longName":"txtABCCodeMargin_93","assocDesc":"Grade C","editable":true,"value":"C"},"lblABCCodeMargin_92":{"id":92,"dataType":1,"bsvw":false,"title":"ABC Code Margin","visible":true,"longName":"lblABCCodeMargin_92","editable":false,"value":"ABC Code Margin"},"lblDL01_470":{"id":470,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_470","editable":false,"value":""},"lblDL01_471":{"id":471,"dataType":2,"bsvw":false,"title":"Inhibited From Processing","visible":true,"longName":"lblDL01_471","editable":false,"value":"Inhibited From Processing"},"txtAlternatePayor_461":{"id":461,"internalValue":1000,"dataType":9,"bsvw":true,"title":"Alternate Payor","visible":true,"longName":"txtAlternatePayor_461","assocDesc":"Gas StationA","editable":true,"value":"1000"},"lblAlternatePayor_462":{"id":462,"dataType":9,"bsvw":false,"title":"Alternate Payor","visible":true,"longName":"lblAlternatePayor_462","editable":false,"value":"Alternate Payor"},"txtPolicyName_100":{"id":100,"internalValue":" ","dataType":2,"bsvw":false,"title":"Policy Name","visible":true,"longName":"txtPolicyName_100","assocDesc":"Standard Policy","editable":true,"value":""},"lblDL01_463":{"id":463,"dataType":2,"bsvw":false,"title":"Gas StationA","visible":true,"longName":"lblDL01_463","editable":false,"value":"Gas StationA"},"lblDL01_103":{"id":103,"dataType":2,"bsvw":false,"title":"Standard Policy","visible":true,"longName":"lblDL01_103","editable":false,"value":"Standard Policy"},"txtSendMethod_467":{"id":467,"internalValue":" 
","dataType":1,"bsvw":true,"title":"Send Method","visible":true,"longName":"txtSendMethod_467","assocDesc":"Print/Mail","editable":true,"value":""},"lblSendStatementto_105":{"id":105,"dataType":1,"bsvw":false,"title":"Send Statement to","visible":true,"longName":"lblSendStatementto_105","editable":false,"value":"Send Statement to"},"lblSendMethod_468":{"id":468,"dataType":1,"bsvw":false,"title":"Send Method","visible":true,"longName":"lblSendMethod_468","editable":false,"value":"Send Method"},"txtSendStatementto_106":{"id":106,"internalValue":"C","dataType":1,"bsvw":true,"title":"Send Statement to","visible":true,"longName":"txtSendStatementto_106","assocDesc":"Customer","editable":true,"value":"C"},"lblStatementCycle_107":{"id":107,"dataType":2,"bsvw":false,"title":"Statement Cycle","visible":true,"longName":"lblStatementCycle_107","editable":false,"value":"Statement Cycle"},"txtStatementCycle_108":{"id":108,"internalValue":"G","dataType":2,"bsvw":true,"title":"Statement Cycle","visible":true,"longName":"txtStatementCycle_108","assocDesc":"","editable":true,"value":"G"},"lblDL01_430":{"id":430,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_430","editable":false,"value":""},"chkIdentificationCode2_310":{"id":310,"internalValue":" ","dataType":1,"bsvw":true,"title":"Identification Code 2","visible":true,"longName":"chkIdentificationCode2_310","editable":true,"value":"off"},"lblDL01_431":{"id":431,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_431","editable":false,"value":""},"chkReferenceNumber1_311":{"id":311,"internalValue":" ","dataType":1,"bsvw":true,"title":"Reference Number 1","visible":true,"longName":"chkReferenceNumber1_311","editable":true,"value":"off"},"lblDL01_432":{"id":432,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_432","editable":false,"value":""},"chkReferenceNumber2_312":{"id":312,"internalValue":" ","dataType":1,"bsvw":true,"title":"Reference Number 
2","visible":true,"longName":"chkReferenceNumber2_312","editable":true,"value":"off"},"chkWeight_313":{"id":313,"internalValue":" ","dataType":1,"bsvw":true,"title":"Weight","visible":true,"longName":"chkWeight_313","editable":true,"value":"off"},"lblDL01_434":{"id":434,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_434","editable":false,"value":""},"chkRouting_314":{"id":314,"internalValue":" ","dataType":1,"bsvw":true,"title":"Routing","visible":true,"longName":"chkRouting_314","editable":true,"value":"off"},"lblDL01_435":{"id":435,"dataType":2,"bsvw":false,"title":"Grade C","visible":true,"longName":"lblDL01_435","editable":false,"value":"Grade C"},"lblDL01_436":{"id":436,"dataType":2,"bsvw":false,"title":"Grade C","visible":true,"longName":"lblDL01_436","editable":false,"value":"Grade C"},"lblDL01_437":{"id":437,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_437","editable":false,"value":""},"lblDL01_438":{"id":438,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_438","editable":false,"value":""},"lblDL01_440":{"id":440,"dataType":2,"bsvw":false,"title":"Customer","visible":true,"longName":"lblDL01_440","editable":false,"value":"Customer"},"lblDL01_441":{"id":441,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_441","editable":false,"value":""},"txtTaxRateArea_200":{"id":200,"internalValue":" ","dataType":2,"bsvw":true,"title":"Tax Rate/Area","staticText":"Tax Rate/Area","visible":true,"longName":"txtTaxRateArea_200","assocDesc":"","editable":true,"value":""},"lblDL01_442":{"id":442,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_442","editable":false,"value":""},"lblPersonCorporationCode_201":{"id":201,"dataType":1,"bsvw":false,"title":"Person/Corporation Code","visible":true,"longName":"lblPersonCorporationCode_201","editable":false,"value":"Person/Corporation 
Code"},"lblDL01_443":{"id":443,"dataType":2,"bsvw":false,"title":".","visible":true,"longName":"lblDL01_443","editable":false,"value":"."},"txtPersonCorporationCode_202":{"id":202,"internalValue":" ","dataType":1,"bsvw":false,"title":"Person/Corporation Code","staticText":"Person/Corporation Code","visible":true,"longName":"txtPersonCorporationCode_202","assocDesc":"","editable":true,"value":""},"lblDL01_444":{"id":444,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_444","editable":false,"value":""},"lblDL01_445":{"id":445,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_445","editable":false,"value":""},"lblDL01_446":{"id":446,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_446","editable":false,"value":""},"lblAddlIndTaxID_205":{"id":205,"dataType":2,"bsvw":false,"title":"Add'l Ind Tax ID","visible":true,"longName":"lblAddlIndTaxID_205","editable":false,"value":"Add'l Ind Tax ID"},"lblDL01_447":{"id":447,"dataType":2,"bsvw":false,"title":"","visible":true,"longName":"lblDL01_447","editable":false,"value":""},"txtAddlIndTaxID_206":{"id":206,"internalValue":" ","dataType":2,"bsvw":false,"title":"Add'l Ind Tax ID","staticText":"Add'l Ind Tax ID","visible":true,"longName":"txtAddlIndTaxID_206","editable":true,"value":""}},"errors":[],"warnings":[]},"stackId":1,"stateId":1,"rid":"4ae1eb3fc80475ec","currentApp":"P03013_W03013B_ZJDE0001","timeStamp":"2017-12-14:20.51.40","sysErrors":[],"deprecated":true }

Put simply, we are loading this form in JDE (Customer Master) for address book number 1000 and company 00000, and asking JDE to return the entire screen to us.

JDE returns the entire form as JSON.

Wow, that is cool.
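You can make the same call from code instead of a REST client. Below is a minimal Python sketch using only the standard library. It assumes the form service endpoint sits alongside the `tokenrequest` URL from earlier, at `/ais/jderest/formservice` (the formservice POST operation in Oracle's AIS REST reference). `BASE_URL`, `build_formservice_request` and `post_formservice` are hypothetical names of mine. The body is the same JSON you would paste into the REST client, as a Python dict.

```python
import json
import urllib.request

# Assumption: formservice lives next to the tokenrequest endpoint shown earlier.
BASE_URL = "http://e92demo.myriad-it.com/ais/jderest"


def build_formservice_request(body: dict, base_url: str = BASE_URL) -> urllib.request.Request:
    """Wrap a form service JSON body in a POST request."""
    return urllib.request.Request(
        url=f"{base_url}/formservice",
        data=json.dumps(body).encode("utf-8"),
        # The latest tools release insists on an explicit JSON content type.
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_formservice(body: dict, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON form that AIS returns."""
    request = build_formservice_request(body)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

`urlopen` only raises on transport or HTTP failures. As you will see further down, a rejected field value still comes back as a normal HTTP 200 response, so the decoded JSON needs checking too.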

To reduce the data coming back, use the returnControlIDs parameter, documented here: https://docs.oracle.com/cd/E53430_01/EOTRS/op-formservice-post.html
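In code, trimming the response comes down to one extra key: returnControlIDs is just the IDs you want, joined with a pipe. Here is a minimal Python sketch of a request builder for this form. The version, device name and form inputs are taken from the requests in this post (control 7 carries the address book number and control 8 the company). `customer_master_request` is a hypothetical helper of mine, not part of AIS.

```python
from typing import Iterable, Optional


def customer_master_request(
    token: str,
    address_number: int,
    company: str,
    control_ids: Optional[Iterable[int]] = None,
    device_name: str = "MyDevice",
) -> dict:
    """Build a form service body that opens P03013/W03013B for one customer."""
    body = {
        "token": token,
        "version": "ZJDE0001",
        "formInputs": [
            {"id": 7, "value": address_number},  # address book number
            {"id": 8, "value": company},  # company, e.g. "00000"
        ],
        "deviceName": device_name,
        "formName": "P03013_W03013B_ZJDE0001",
    }
    if control_ids is not None:
        # returnControlIDs is one pipe-delimited string, e.g. "30|461|401".
        body["returnControlIDs"] = "|".join(str(i) for i in control_ids)
    return body
```

Leaving `control_ids` out gives you the whole form back, just like the first request.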

```json
{
  "token": "044JEAxluwIRZ6N3gXo/EernNMbxcCRhUxZZnfFiOE7bGY=MDE5MDA4OTExNzQxMDc0NDY3NDc4NTMxM015RGV2aWNlMTUxMzI0NDkxNjg1Mg==",
  "version": "ZJDE0001",
  "formInputs": [
    { "id": 7, "value": 1000 },
    { "id": 8, "value": "00000" }
  ],
  "returnControlIDs": "30|461|401",
  "deviceName": "MyDevice",
  "formName": "P03013_W03013B_ZJDE0001"
}
```

If you only want a couple of control IDs, use a request like the one above. This is what comes back:

{"fs_P03013_W03013B":{"title":"Customer Master Revision","data":{"txtPaymentTermsAR_30":{"id":30,"internalValue":"P2","dataType":2,"bsvw":true,"title":"Payment Terms - A/R","visible":true,"longName":"txtPaymentTermsAR_30","assocDesc":"P2 payment term","editable":true,"value":"P2"},"txtAlternatePayor_461":{"id":461,"internalValue":1000,"dataType":9,"bsvw":true,"title":"Alternate Payor","visible":true,"longName":"txtAlternatePayor_461","assocDesc":"Gas StationA","editable":true,"value":"1000"},"txtBatchProcessingMode_401":{"id":401,"internalValue":"I","dataType":1,"bsvw":true,"title":"Batch Processing Mode","visible":true,"longName":"txtBatchProcessingMode_401","assocDesc":"Inhibited From Processing","editable":true,"value":"I"}},"errors":[],"warnings":[]},"stackId":2,"stateId":1,"rid":"4ae1eb3fc80475ec","currentApp":"P03013_W03013B_ZJDE0001","timeStamp":"2017-12-14:21.01.18","sysErrors":[],"deprecated":true }
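Each control in the response is keyed by its long name and carries its own `id`, `value` and `internalValue`. To pull out just the values, look up the form object and walk its `data` map. In the responses in this post, that object's key is `fs_` plus the application and form name without the version (`fs_P03013_W03013B`). I am inferring that naming from these samples, not from documentation. `form_key` and `control_values` are hypothetical helpers.

```python
def form_key(form_name: str) -> str:
    """'P03013_W03013B_ZJDE0001' -> 'fs_P03013_W03013B' (inferred from the samples)."""
    app, form = form_name.split("_")[:2]
    return f"fs_{app}_{form}"


def control_values(response: dict, form_name: str) -> dict:
    """Map control ID -> display value for every control AIS returned."""
    data = response[form_key(form_name)]["data"]
    return {control["id"]: control["value"] for control in data.values()}


# Trimmed copy of the response above (only the fields the helper reads).
sample = {
    "fs_P03013_W03013B": {
        "title": "Customer Master Revision",
        "data": {
            "txtPaymentTermsAR_30": {"id": 30, "value": "P2"},
            "txtAlternatePayor_461": {"id": 461, "value": "1000"},
            "txtBatchProcessingMode_401": {"id": 401, "value": "I"},
        },
        "errors": [],
        "warnings": [],
    }
}
print(control_values(sample, "P03013_W03013B_ZJDE0001"))
# {30: 'P2', 461: '1000', 401: 'I'}
```

Note that `value` is the display string. If you need the typed value (the alternate payor is the number 1000, not the string), read `internalValue` instead.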

Have you got our AIS ID tool, JDE-xpansion, in your Chrome browser?

When you sign in to JDE, Shift+left-click a control:

How nice is that? It shows you the control ID and copies it to your clipboard. Sweet!

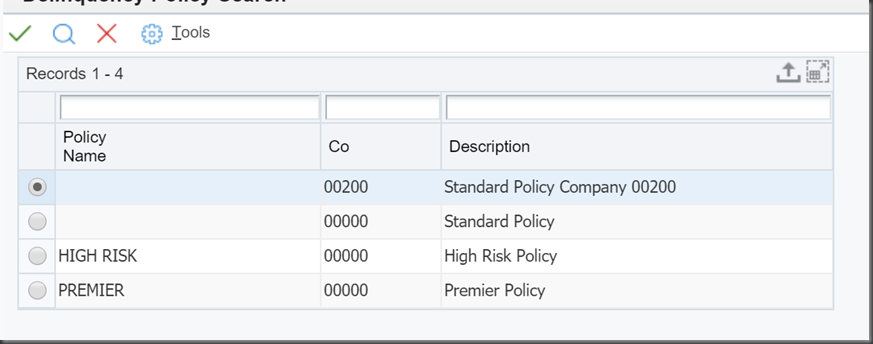

Finally, let’s change some data!

I’m going to change the A/R delinquency policy, whose control ID I found with JDE-xpansion to be 100.

First I try:

```json
{
  "token": "044JEAxluwIRZ6N3gXo/EernNMbxcCRhUxZZnfFiOE7bGY=MDE5MDA4OTExNzQxMDc0NDY3NDc4NTMxM015RGV2aWNlMTUxMzI0NDkxNjg1Mg==",
  "version": "ZJDE0001",
  "formInputs": [
    { "id": 7, "value": 1000 },
    { "id": 8, "value": "00000" }
  ],
  "formActions": [
    {
      "command": "SetControlValue",
      "value": "PREMIERE",
      "controlID": "100"
    }
  ],
  "returnControlIDs": "30|461|401",
  "deviceName": "MyDevice",
  "formName": "P03013_W03013B_ZJDE0001"
}
```

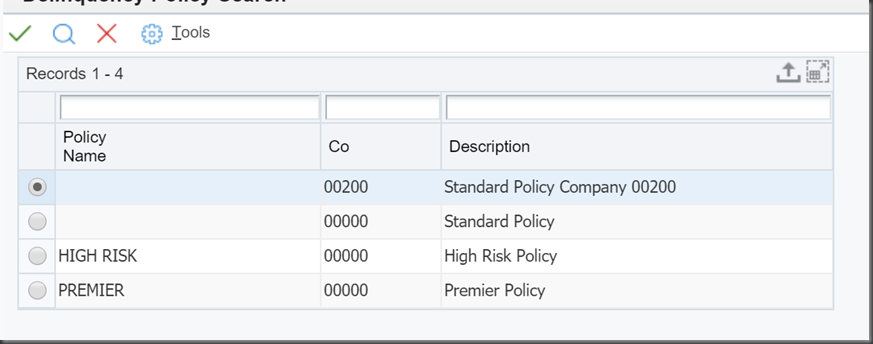
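The change rides along in `formActions`, a list of commands that AIS runs against the form in order. Here it holds a single `SetControlValue` on control 100. From code, that means appending to the read request. This is a sketch with a hypothetical helper name. The body is trimmed to two keys for brevity, and `controlID` is sent as a string only because that is how it appears in the request above.

```python
import copy


def with_set_control_value(body: dict, control_id: int, value: str) -> dict:
    """Return a copy of a form service body with a SetControlValue action appended."""
    updated = copy.deepcopy(body)  # leave the caller's body untouched
    updated.setdefault("formActions", []).append(
        {
            "command": "SetControlValue",
            "value": value,
            "controlID": str(control_id),  # a string, as in the request above
        }
    )
    return updated


read_body = {"formName": "P03013_W03013B_ZJDE0001", "returnControlIDs": "30|461|401"}
change_body = with_set_control_value(read_body, 100, "PREMIERE")
```

Copying the body means you can keep one read request around and derive change requests from it without them leaking into each other.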

And I get an error, even though the HTTP status code is 200:

{"fs_P03013_W03013B":{"title":"Customer Master Revision","data":{"txtPaymentTermsAR_30":{"id":30,"internalValue":"P2","dataType":2,"bsvw":true,"title":"Payment Terms - A/R","visible":true,"longName":"txtPaymentTermsAR_30","assocDesc":"P2 payment term","editable":true,"value":"P2"},"txtAlternatePayor_461":{"id":461,"internalValue":1000,"dataType":9,"bsvw":true,"title":"Alternate Payor","visible":true,"longName":"txtAlternatePayor_461","assocDesc":"Gas StationA","editable":true,"value":"1000"},"txtBatchProcessingMode_401":{"id":401,"internalValue":"I","dataType":1,"bsvw":true,"title":"Batch Processing Mode","visible":true,"longName":"txtBatchProcessingMode_401","assocDesc":"Inhibited From Processing","editable":true,"value":"I"}},"errors":[{"CODE":"029X","TITLE":"Error: Policy Invalid","ERRORCONTROL":"100","DESC":"CAUSE . . . The policy name entered is not found in the A/R Delinquency Policy\\u000a Master file(F03B25).\\u000aRESOLUTION. .Enter a valid policy name.","MOBILE":"The policy name entered is not found in the A/R Delinquency Policy\\u000a Master file(F03B25)."}],"warnings":[]},"stackId":3,"stateId":1,"rid":"4ae1eb3fc80475ec","currentApp":"P03013_W03013B_ZJDE0001","timeStamp":"2017-12-14:21.06.50","sysErrors":[],"deprecated":true }

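So the error makes it clear that the policy name is misspelt (PREMIERE rather than PREMIER), and ERRORCONTROL tells you which control (100) is at fault.

So your code is going to need to check the errors array, not just the HTTP status. Each entry carries CODE, TITLE, ERRORCONTROL, DESC and MOBILE fields.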
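Because AIS answers with HTTP 200 even when the form rejects a value, the check has to look inside the body. Here is a minimal Python sketch. `AISFormError` and `raise_on_form_errors` are hypothetical names, and the default key is the `fs_P03013_W03013B` object seen in these responses. It treats only `errors` as fatal and ignores `warnings`. Whether warnings should stop your integration is your call.

```python
class AISFormError(Exception):
    """A form-level error reported by AIS inside an HTTP 200 response."""

    def __init__(self, errors: list):
        super().__init__(
            "; ".join(
                f"{e.get('CODE')} {e.get('TITLE')} (control {e.get('ERRORCONTROL')})"
                for e in errors
            )
        )
        self.errors = errors


def raise_on_form_errors(response: dict, key: str = "fs_P03013_W03013B") -> dict:
    """Return the form object when its errors array is empty, else raise AISFormError."""
    form = response[key]
    if form.get("errors"):
        raise AISFormError(form["errors"])
    return form


# Trimmed copy of the error response above.
rejected = {
    "fs_P03013_W03013B": {
        "errors": [
            {"CODE": "029X", "TITLE": "Error: Policy Invalid", "ERRORCONTROL": "100"}
        ],
        "warnings": [],
    }
}

try:
    raise_on_form_errors(rejected)
except AISFormError as exc:
    print(exc)  # 029X Error: Policy Invalid (control 100)
```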

When the value is spelled correctly, the response looks like this:

{"fs_P03013_W03013B":{"title":"Customer Master Revision","data":{"txtPaymentTermsAR_30":{"id":30,"internalValue":"P2","dataType":2,"bsvw":true,"title":"Payment Terms - A/R","visible":true,"longName":"txtPaymentTermsAR_30","assocDesc":"P2 payment term","editable":true,"value":"P2"},"txtAlternatePayor_461":{"id":461,"internalValue":1000,"dataType":9,"bsvw":true,"title":"Alternate Payor","visible":true,"longName":"txtAlternatePayor_461","assocDesc":"Gas StationA","editable":true,"value":"1000"},"txtBatchProcessingMode_401":{"id":401,"internalValue":"I","dataType":1,"bsvw":true,"title":"Batch Processing Mode","visible":true,"longName":"txtBatchProcessingMode_401","assocDesc":"Inhibited From Processing","editable":true,"value":"I"}},"errors":[],"warnings":[]},"stackId":4,"stateId":1,"rid":"4ae1eb3fc80475ec","currentApp":"P03013_W03013B_ZJDE0001","timeStamp":"2017-12-14:21.09.21","sysErrors":[],"deprecated":true }

Note that errors is now an empty array ([]).

Note also that I’m not pressing OK above, so nothing is saved yet. I’m just checking the value!

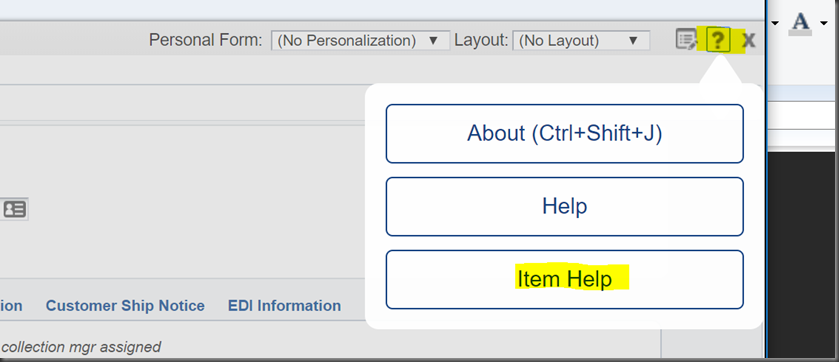

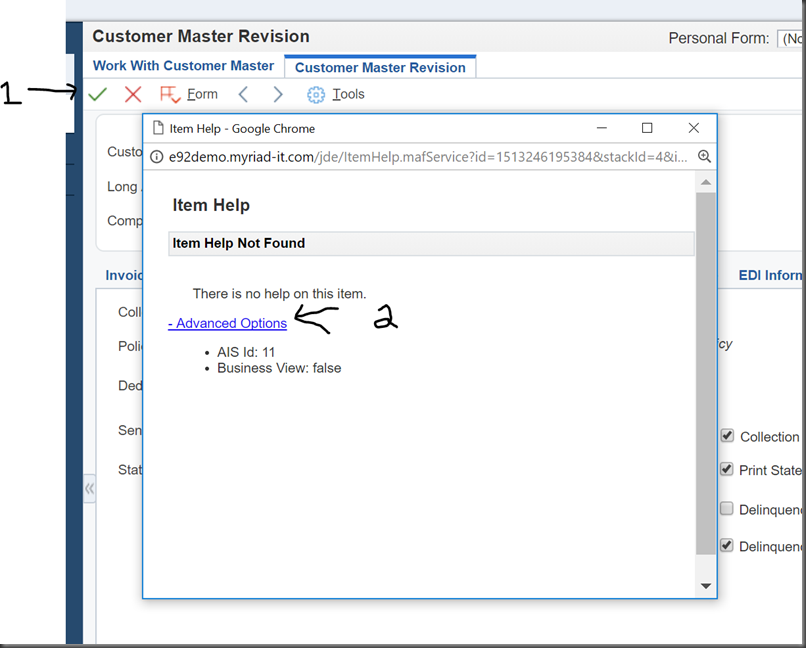

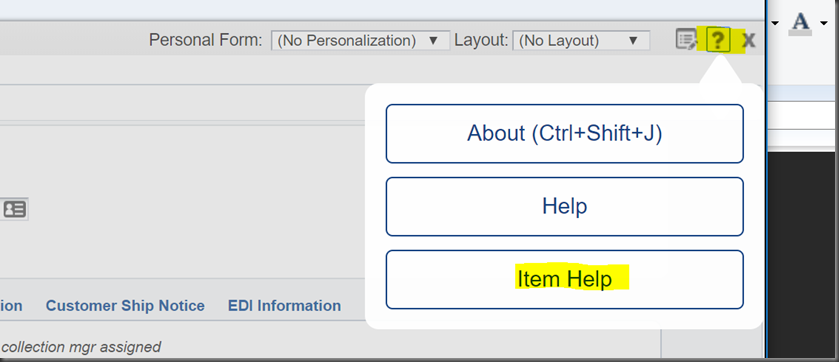

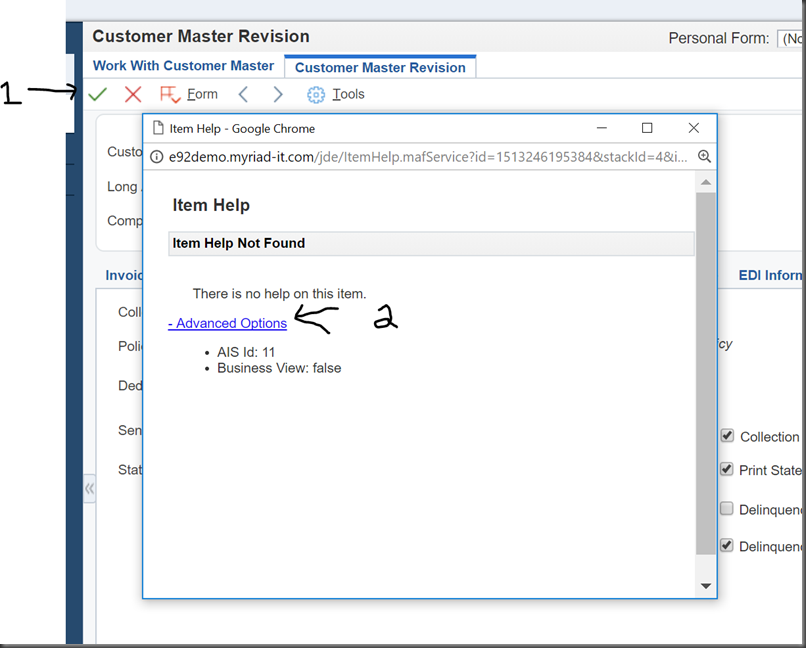

Which control is the OK button? To find out, you need to use Item Help.

Then click the OK button.

Its AIS ID is 11.

```json
{
  "token": "044JEAxluwIRZ6N3gXo/EernNMbxcCRhUxZZnfFiOE7bGY=MDE5MDA4OTExNzQxMDc0NDY3NDc4NTMxM015RGV2aWNlMTUxMzI0NDkxNjg1Mg==",
  "version": "ZJDE0001",
  "formInputs": [
    { "id": 7, "value": 1000 },
    { "id": 8, "value": "00000" }
  ],
  "formActions": [
    {
      "command": "SetControlValue",
      "value": "PREMIER",
      "controlID": "100"
    },
    {
      "command": "DoAction",
      "controlID": "11"
    }
  ],
  "returnControlIDs": "30|461|401",
  "deviceName": "MyDevice",
  "formName": "P03013_W03013B_ZJDE0001"
}
```

{"fs_P03013_W03013B":{"title":"Customer Master Revision","data":{"txtPaymentTermsAR_30":{"id":30,"internalValue":"P2","dataType":2,"bsvw":true,"title":"Payment Terms - A/R","visible":true,"longName":"txtPaymentTermsAR_30","assocDesc":"P2 payment term","editable":true,"value":"P2"},"txtAlternatePayor_461":{"id":461,"internalValue":1000,"dataType":9,"bsvw":true,"title":"Alternate Payor","visible":true,"longName":"txtAlternatePayor_461","assocDesc":"Gas StationA","editable":true,"value":"1000"},"txtBatchProcessingMode_401":{"id":401,"internalValue":"I","dataType":1,"bsvw":true,"title":"Batch Processing Mode","visible":true,"longName":"txtBatchProcessingMode_401","assocDesc":"Inhibited From Processing","editable":true,"value":"I"}},"errors":[],"warnings":[]},"stackId":5,"stateId":1,"rid":"4ae1eb3fc80475ec","currentApp":"P03013_W03013B_ZJDE0001","timeStamp":"2017-12-14:21.14.41","sysErrors":[],"deprecated":true }

Once again errors is [], so no errors were reported.
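So the full recipe for saving a change is a `SetControlValue` on the field followed by a `DoAction` on the OK button, in that order within `formActions`. Here is a sketch of a hypothetical helper that builds that list. 100 and 11 are the control IDs found above for this form, and they will differ on other forms.

```python
def save_actions(control_id: int, value: str, ok_button_id: int = 11) -> list:
    """formActions that set one control and then press the OK button."""
    return [
        # 1. Put the new value into the field (validated, not yet saved).
        {"command": "SetControlValue", "value": value, "controlID": str(control_id)},
        # 2. Press OK; 11 is the OK button's AIS ID on this form (from Item Help).
        {"command": "DoAction", "controlID": str(ok_button_id)},
    ]


actions = save_actions(100, "PREMIER")
```

Drop the resulting list into the `formActions` key of the request body, then run the response through the same errors check as before.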

I think you might have just written your first AIS-based code – pretty neat.

Next will be some advanced orchestration for you.