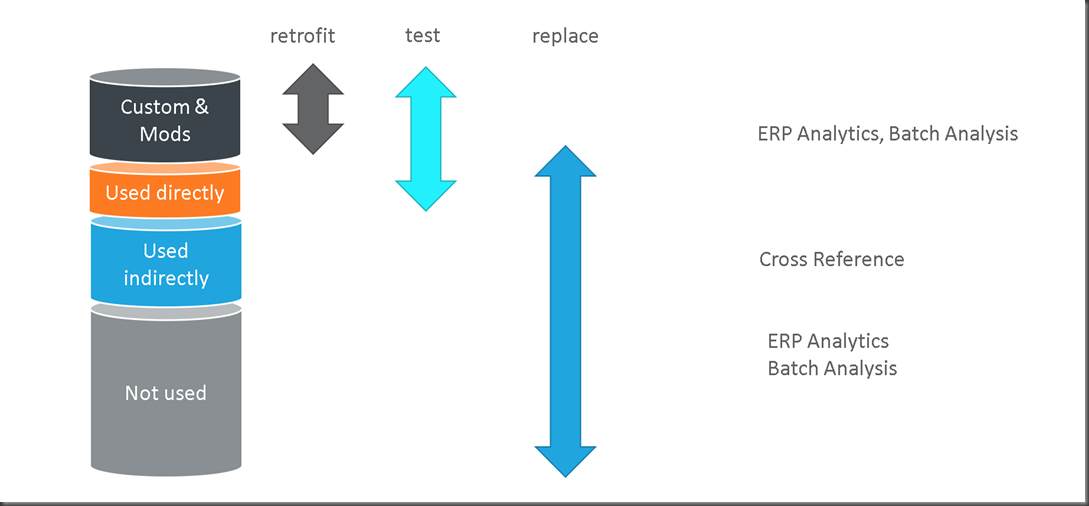

At Fusion5 we are doing lots of upgrades all of the time, so we need to understand our clients' technical debt. We strive to make every upgrade more cost-efficient and easier. This is easier said than done, but let me mention a couple of ways we do this:

Intelligent and consistent use of category codes for objects. One of these codes is specifically about retrofit and needs to be completed when the object is created: "retrofit needed". Sounds simple, I know. But if you create something bespoke that will never need to be retrofitted, the best thing you can do is mark it that way. That saves lots of time whenever this object is looked at in future upgrades (again and again).
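To make the time saving concrete, here is a hypothetical Python sketch of how an upgrade team might triage an exported object list once the retrofit category code is filled in consistently. The `JdeObject` class, the `retrofit_needed` field, the "Y"/"N" values and the object names are all assumptions for illustration, not the actual JD Edwards category code layout or export format.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class JdeObject:
    name: str
    # Hypothetical value of the "retrofit needed" category code:
    # "Y" = retrofit needed, "N" = never needs retrofit,
    # None = the developer never filled the code in.
    retrofit_needed: Optional[str]


def triage(objects: List[JdeObject]) -> Tuple[List[str], List[str], List[str]]:
    """Split objects into (skip, retrofit, review) lists of object names."""
    skip: List[str] = []
    retrofit: List[str] = []
    review: List[str] = []
    for obj in objects:
        if obj.retrofit_needed == "N":
            skip.append(obj.name)        # bespoke, marked as never needing retrofit
        elif obj.retrofit_needed == "Y":
            retrofit.append(obj.name)    # known retrofit work
        else:
            review.append(obj.name)      # unmarked: someone must analyse it again
    return skip, retrofit, review


if __name__ == "__main__":
    # Hypothetical object names, for illustration only.
    objs = [
        JdeObject("R5501001", "N"),
        JdeObject("P554210", "Y"),
        JdeObject("R5509999", None),
    ]
    print(triage(objs))
```

Objects marked "N" drop straight out of the retrofit work; only the unmarked ones land in the review pile, which is exactly the repeated analysis the category code is meant to avoid.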

Replace modifications with configuration. UDOs have made this better and easier, and continue to do so. If you are retrofitting and you think "hey, I could do this with a UDO", please do yourself a favour: configure a UDO and don't touch the code! Security is also an important concept for developers to understand completely, because, guess what? You can use security to force people to enter something into the QBE line; you don't need to use code (Application Query Security).

- Everyone needs to understand UDOs well. We all have a role in simplification.

If you don't know what EVERY one of these is, you need to know!

OCMs can be used to force keyed queries. Wow! Did you know that you can create a specific OCM that forces people to use only keyed fields in the QBE line? Awesome, and so simple. I know there is code out there that enforces this, but just like the security tip above, configuration does the job without touching code.