I want my users to be productive, and I want them to be able to use their familiar environment.

I know that there has been a lot of change in the later releases, especially with UDOs – I’ve documented a bit of this in some posts. I’m going to deal quite specifically with F98950 and F952440, as F952440 is the new F98950. When you are on a new tools release, the user overrides are read from F952440 instead of F98950 – nice and easy. But you need to get them there!

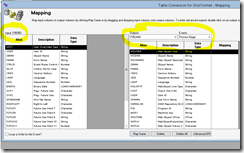

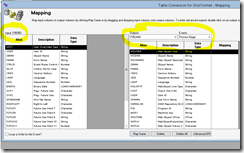

There is a defined method: run R89952440, the table conversion for grid formats. Simple! You reckon?

How do I tell it where it’s going to get F98950 from?

It’s a TC, so you specify input and output environments: input might be UA910 and output might be UA920. That makes sense!

So, with that explained, let’s look a little deeper. What I’ve found (and what others have told me) is that if you have records in F9860W or F9861W for grid formats, the process breaks down!

Firstly, your roles (and users) need to exist for this to work. Look at this code, which has no error handling:

So now we get to the F9860W logic:

If there is a record that matches on description, webObjectType, Object, Form and version…

But this is F9860W, not F9861W. F9860W has no path code column (the path code lives in F9861W), so it seems to me that if ANY environment has this value, the GD record won’t come forward.

My brain cannot handle the logic in this UBE, but let me give you the facts. If I leave F9860W and F9861W alone and run the conversion, only 7,900-ish grid formats come over. Aarrgghhh!!!

If I clean out F9860W and F9861W and then run it, more than 12,000 come over! You be the judge. Even if I remove only the PP920 records from F9861W, I still do not get all of my grids coming over. This area needs some work.
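Before changing anything, it can be worth checking how exposed you are by counting the grid format records sitting in the transfer tables. This is a minimal sketch, assuming the OL920 library and the same column names used in the statements further down (WOWOTYP, WOWOBNM, SIPATHCD, SIWOBNM):

```sql
-- How many grid FORMAT objects are registered in F9860W?
select count(*) as format_objects
from ol920.f9860w
where wowotyp = 'FORMAT';

-- How many F9861W rows point at those FORMAT objects, per path code?
select sipathcd, count(*) as format_rows
from ol920.f9861w
where siwobnm in (select wowobnm from ol920.f9860w where wowotyp = 'FORMAT')
group by sipathcd
order by sipathcd;
```

If both counts come back as zero, the F9860W/F9861W issue described above should not apply to you.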

Here is what you need to do if you want all of your grids to come over for an upgrade:

AS/400 syntax – sorry!

First, back up OL920 to a *SAVF.
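One way to take that backup without leaving SQL is to run the CL commands through the QSYS2.QCMDEXC procedure. This is a sketch only: the save file QGPL/OL920BAK is a hypothetical name, and it assumes a release where QSYS2.QCMDEXC takes the command string on its own (IBM i 7.2 or later, as far as I know). Otherwise, run the same CRTSAVF and SAVLIB commands from a 5250 command line.

```sql
-- Hypothetical save file QGPL/OL920BAK: pick your own library and name.
-- Create the save file that will hold the backup.
CALL QSYS2.QCMDEXC('CRTSAVF FILE(QGPL/OL920BAK)');

-- Save the whole OL920 library into that save file.
CALL QSYS2.QCMDEXC('SAVLIB LIB(OL920) DEV(*SAVF) SAVF(QGPL/OL920BAK)');
```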

```sql
-- These are the statements that do the actual work.

-- Keep a working copy of every grid FORMAT object in F9860W.
create table ol920.f9860ws as (select * from ol920.f9860w where wowotyp='FORMAT') with data;

-- Remove the PP920 F9861W rows for those FORMAT objects.
delete from ol920.f9861w where sipathcd = 'PP920' and siwobnm in (select wowobnm from ol920.f9860w where wowotyp='FORMAT');

-- Remove all FORMAT objects from F9860W.
delete from ol920.f9860w where wowotyp='FORMAT';

-- So now you have no formats in F9860W and none for PP920 in F9861W. This is fine, as these tables are all about transfers, not runtime!

-- Run the conversion. Create your own version of R89952440 from PLANNER. For this TC, FROM is the F98950 location and TO is the F952440 location. If FROM and TO point at the same library, make sure that F98950 is also in the PP920 library.

-- Now patch your F9860W: insert any missing formats from the other environments.
insert into ol920.f9860w select * from ol920.f9860ws t2 where not exists (select 1 from ol920.f9860w t3 where t2.wowobnm = t3.wowobnm and t3.WOWOTYP = 'FORMAT');
```
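A quick sanity check after the patch, to confirm every saved format made it back into F9860W. This is my own sketch, not part of the steps above. It assumes the F9860WS working table still exists, and since the conversion may have added FORMAT rows of its own, the live count should be at least the saved count rather than exactly equal:

```sql
-- Compare how many FORMAT objects were saved with how many F9860W holds now.
select
  (select count(*) from ol920.f9860ws) as formats_saved,
  (select count(*) from ol920.f9860w where wowotyp = 'FORMAT') as formats_now
from sysibm.sysdummy1;

-- List any saved FORMAT object still missing from F9860W (expect no rows).
select t2.wowobnm
from ol920.f9860ws t2
where not exists (select 1 from ol920.f9860w t3
                  where t3.wowobnm = t2.wowobnm and t3.wowotyp = 'FORMAT');
```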

Job done! Time to reconcile with this little bad boy (thanks to Shae again):

```sql
select a.* from copp920.f98950 a
left outer join copp920.f952440 b on
  (a.UOUSER = b.GFWOUSER and a.UOOBNM = b.GFOBNM and a.UOVERS = b.GFVERS and a.UOFMNM = b.GFFMNM and
   a.UOSEQ = b.GFSEQ)
where b.GFOBNM is null and a.UOUOTY in ('GD')
```

42 did not make it, which matches the debug log EXACTLY!

Opening UBE Log for report R89952440, version SIMPP920

TCEngine Level 1 ..\..\common\TCEngine\tcinit.c : Input F98950 is using data source Central Objects - PP920.

TCEngine Level 1 ..\..\common\TCEngine\tcinit.c : Output F952440 is using data source Central Objects - PP920.

TCEngine Level 1 ..\..\common\TCEngine\tcinit.c : Conversion method is Row by Row.

TCEngine Level 1 ..\..\common\TCEngine\tcdump.c :

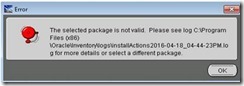

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 0 ..\..\common\TCEngine\tcrun.c : TCE009143 - Insert Row failed for table F952440.

TCEngine Level 1 ..\..\common\TCEngine\tcrun.c : Conversion R89952440 SIMPP920 done successfully. Elapsed time - 301.060000 Seconds.

UBE Job Finished SuccessFully.
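If you want to know whose grids are in that leftover set (before chasing them one by one), the reconciliation query can be grouped by user. This is a sketch that reuses exactly the tables, join columns and GD filter from the reconciliation query; only the grouping is new:

```sql
-- Count the GD overrides per user that have no matching F952440 row.
select a.UOUSER, count(*) as missing_grids
from copp920.f98950 a
left outer join copp920.f952440 b on
  (a.UOUSER = b.GFWOUSER and a.UOOBNM = b.GFOBNM and a.UOVERS = b.GFVERS and a.UOFMNM = b.GFFMNM and
   a.UOSEQ = b.GFSEQ)
where b.GFOBNM is null and a.UOUOTY = 'GD'
group by a.UOUSER
order by missing_grids desc;
```

The per-user totals should add up to the same number the ungrouped query returns.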