This script has the bones of everything you need to see how many of your media object records actually have physical files. You could augment this with curl and a bunch of smarts to to URL’s also, but my script is simple for the time being.

Note that you need to create your reconciliation table:

drop table jde.f00165srm;

create table jde.f00165srm as select GDOBNM, GDTXKY, GDMOSEQN, ' ' AS FILEEXISTS from crpdta.f00165 where 1=0 ;

This is the vbs script, just create and paste. you’ll need to create an ODBC with the name TVLNE8 (in this example)

Script:

fileSuffix=Left(FormatDateTime(dateStamp, 1),6) & day(dateStamp) & monthName(month(dateStamp)) & hour(dateStamp)

Const ForAppending = 8

logfile="h:\data\myr" & fileSuffix & ".txt"

Set objFSO = CreateObject("Scripting.FileSystemObject")

set objTextFile = objFSO.OpenTextFile(logfile, ForAppending, True)

DIM fso

Set fso = CreateObject("Scripting.FileSystemObject")

Dim Oracon

set oraccon = wscript.createobject("ADODB.Connection")

Dim recset

set recset = wscript.createobject("ADODB.Recordset")

set Insertresults = wscript.createobject("ADODB.Recordset")

set f98moquerecset = wscript.createobject("ADODB.Recordset")

Dim cmd

set cmd = wscript.createobject("ADODB.Command")

set cmdInsert = wscript.createobject("ADODB.Command")

set cmdf98moque = wscript.createobject("ADODB.Command")

Set Oracon = wscript.CreateObject("ADODB.Connection")

Oracon.ConnectionString = "DSN=TVLNE8;" & _

"User ID=jde;" & _

"Password=jde;"

Oracon.Open

Set cmd.ActiveConnection = Oracon

Set cmdInsert.ActiveConnection = Oracon

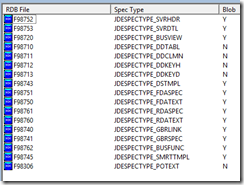

cmd.CommandText = "Select gdobnm, gdtxky, gdmoseqn, gdgtfilenm, gdgtmotype, gdgtitnm, gdqunam from crpdta.F00165 where gdgtmotype in ('5','2','1') and gdobnm like 'GT%'"

'cmd.CommandText = "Select gdobnm, gdtxky, gdgtfilenm, gdgtmotype, gdgtitnm, gdqunam from proddta.F00165_91 where gdgtmotype in ('1')"

Set recset = cmd.Execute

while (recset.EOF=false)

'Generally this would be in a function, but it needs to be fast!!

'NOte that type 2 and 1 cld be same function, as they are based on queues

'but i'm doing an upgrade, and it's complicated... this hard code of DIR

'does not really need to be there

if recset("GDGTMOTYPE") = "2" then

filenametotest = "D:\MEDIAOBJ\Oleque\" & trim(recset("GDGTFILENM"))

elseif recset("GDGTMOTYPE") = "1" then

filenametotest = moqueue(trim(recset("GDQUNAM"))) & "\" & trim(recset("GDGTFILENM"))

'wscript.echo "queue (" & trim(recset("GDQUNAM")) & ") path (" & moqueue(trim(recset("GDQUNAM"))) & ")"

else

filenametotest = trim(recset("GDGTFILENM"))

end if

If (fso.FileExists(filenametotest)) Then

'WScript.Echo("File exists! TP(" & recset("GDGTMOTYPE") &"): " & filenametotest)

fileexistsstatus="YES"

Else

fileexistsstatus="NO"

'WScript.Echo("File does not exist! TP(" & recset("GDGTMOTYPE") &"): " & filenametotest)

End If

cmdInsert.CommandTExt = "INSERT INTO JDE.f00165SRM (GDOBNM, GDTXKY, GDMOSEQN, FILEEXISTS) values ('" & _

recset("GDOBNM") & "', '" & _

recset("GDTXKY") & "', '" & _

recset("GDMOSEQN") & "', '" & _

fileexistsstatus & "')"

'wscript.echo "SQL:" & cmdInsert.CommandTExt

Set insertrecset = cmdInsert.Execute

recset.MoveNext

'wscript.echo recset("gdgtfilenm")

'tuple=" "

wend

set recset = nothing

oracon.close

set oracon = nothing

Check the progress of the script with:

select * from jde.f00165srm;

select count(*) from jde.f00165srm;

Now look at the results. Note that this also demonstrates a handy left outer with multiple join items.

select t1.gdtxky, t1.gdobnm, t1.fileexists, t2.gdgtfilenm from jde.f00165srm t1 left outer join crpdta.f00165 t2 on t1.gdobnm = t2.gdobnm and t1.gdtxky = t2.gdtxky and t1.gdmoseqn = t2.gdmoseqn;