This post shows a rapid method of converting world tables into OneWorld tables. This is not for the faint of heart. If you only like doing things that are in official oracle manuals, please close this browser window and forget you visited this page. Starting printing off the hundreds of table definitions and 750 custom DD items. Then sign into E1, create a project and being typing. See you in a month! Oh and when you make a typo on 1 DD item or order the columns wrong, good luck finding it…

If you are more adventurous (in a very nerdy kinda way), keep reading (even if it is between your fingers as your hands cover your eyes).

Note that this procedure also allows for the copy to an oracle database, this is not mandatory for it to work.

This could be one of the largest cowboys you’ve ever read about.

Tables in OneWorld need the following to be valid:

· F9860 record

· F9861 record

· DD items / columns

· F98710 record – table header

· F98711 record(s) – table detail (row for each column)

· F98712 record – key header

· F98713 record – key details

So we are going to build all of the above with SQL from the AS/400 table definitions and catalogs.

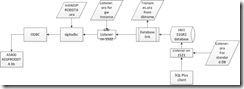

Copy data from AS/400 to oracle. http://shannonscncjdeblog.blogspot.com.au/2014/05/using-heterogeneous-database-services.html

Use procedure outlined in “Creating ODBC connectivity from Oracle using heterogeneous database services”.

This is done with a simple set of “CREATE TABLE AS select * FROM” statements using the database link that you establish from the AS/400.

I was able to move about 30GB of data in 4 hours, this was the contents of 110 custom tables with about 165000000 rows.

I have created my custom tables in CRPDTA.

--110 table header entries

insert into dv910.f98710 select 0, trim(table_name), 0,0,0,'E910','','','',''

from all_tables t1

where t1.owner = 'CRPDTA'

and (t1.table_name like 'F55%'

or t1.table_name like 'F56%'

or t1.table_name like 'F57%'

or t1.table_name like 'F58%'

or t1.table_name like 'F59%')

;

commit;

insert into dv910.f98711

select 0,table_name,0,trim(substr(column_name,3,10)),column_id,trim(column_name),0,'E910',' ',' ',' ',' '

from all_tab_columns where owner = 'CRPDTA'

and (table_name like 'F55%'

or table_name like 'F56%'

or table_name like 'F57%'

or table_name like 'F58%'

or table_name like 'F59%');

commit;

Note that I was creating a read only environment, so I did not care for the key or the format of the key. E1 just needs a PK. I chose the first column of the table.

insert into dv910.f98712 select 0, trim(table_name), 1,'Primary Index','1','1',0,'E910','','','',''

from all_tables t1

where t1.owner = 'CRPDTA'

and (t1.table_name like 'F55%'

or t1.table_name like 'F56%'

or t1.table_name like 'F57%'

or t1.table_name like 'F58%'

or t1.table_name like 'F59%');

commit

;

insert into dv910.f98713

select 0,trim(table_name),1,'Primary Key', 0, trim(substr(column_name,3,10)),1,'A',0,'E910',' ', ' ', ' ', ' '

from all_tab_columns where owner = 'CRPDTA'

and column_id = 1

and (table_name like 'F55%'

or table_name like 'F56%'

or table_name like 'F57%'

or table_name like 'F58%'

or table_name like 'F59%');

commit;

insert into dd910.f9200

select distinct trim(substr(column_name,3,10)), '55', '55', 'D', 'NDENT', 'SQL', 114100, 'MYPC', 6000

from all_tab_columns where owner = 'CRPDTA'

and (table_name like 'F55%'

or table_name like 'F56%'

or table_name like 'F57%'

or table_name like 'F58%'

or table_name like 'F59%')

and not exists

(select 1 FROM dd910.f9200

where trim(frdtai) = trim(substr(column_name,3,10))

)

;

commit;

insert into dd910.f9203

select distinct trim(substr(column_name,3,10)), ' ', ' ', 'DEfault Description', 'DEFAULT DESCRIPTION', ' '

from all_tab_columns where owner = 'CRPDTA'

and (table_name like 'F55%'

or table_name like 'F56%'

or table_name like 'F57%'

or table_name like 'F58%'

or table_name like 'F59%')

and not exists

(select 1 FROM dd910.f9203

where trim(frdtai) = trim(substr(column_name,3,10))

)

;

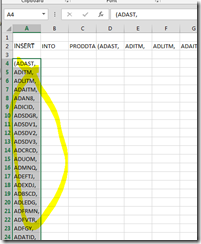

This was a little harder, I could have used decode statements in my SQL, but I was getting tired. I used the inserted spreadsheet (with formulas) to build the insert statements.

Note that the DD could have been done much better if the world -> E1 DD merge had been run, this would give proper descriptions. Once again, I can update the descriptions later if I care via the database link and knowing where to find them. My issue was that the CCSID conversion was not run over the world DD, therefore all of the columns in the DD files where junk when I tried to select them.

--741 custom DD items (not in 910 DD)

select distinct substr(column_name,3,10), data_type, data_length from all_tab_columns where owner = 'CRPDTA'

and (table_name like 'F55%'

or table_name like 'F56%'

or table_name like 'F57%'

or table_name like 'F58%'

or table_name like 'F59%')

and not exists

(select 1 FROM dd910.f9210

where trim(frdtai) = trim(substr(column_name,3,10))

)

;

Formula for spreadsheet:

=+CONCATENATE("INSERT INTO DD910.F9210 values('",TRIM(MID(C837,3,6)),"',' ','S',",G837,",0,' ',0,' ',' ','",H837,"',' ',' ', ' ', ' ', ' ', ' ', ' ',0,' ',' ','SMOIRPC',114250,' ','SMOIR',9000, 'Description",ROW(),"', ",IF(D837="NUMBER",9,IF(D837="CHAR",2,11)),",4,'N','N','N',' ',' ',' ',' ',' ',0",",' ',0,' ',0,' ',0,' ','N',0,'N' ",");")

Which generates:

| INSERT INTO DD910.F9210 values('$A01',' ','S',3,0,' ',0,' ',' ','',' ',' ', ' ', ' ', ' ', ' ', ' ',0,' ',' ','SMOIRPC',114250,' ','SMOIR',9000, 'Description837', 2,4,'N','N','N',' ',' ',' ',' ',' ',0,' ',0,' ',0,' ',0,' ','N',0,'N' ); |

| INSERT INTO DD910.F9210 values('$A02',' ','S',3,0,' ',0,' ',' ','',' ',' ', ' ', ' ', ' ', ' ', ' ',0,' ',' ','SMOIRPC',114250,' ','SMOIR',9000, 'Description838', 2,4,'N','N','N',' ',' ',' ',' ',' ',0,' ',0,' ',0,' ',0,' ','N',0,'N' ); |

| INSERT INTO DD910.F9210 values('$A03',' ','S',3,0,' ',0,' ',' ','',' ',' ', ' ', ' ', ' ', ' ', ' ',0,' ',' ','SMOIRPC',114250,' ','SMOIR',9000, 'Description839', 2,4,'N','N','N',' ',' ',' ',' ',' ',0,' ',0,' ',0,' ',0,' ','N',0,'N' ); |

insert into ol910.f9861

select distinct trim(t1.table_name), 'NZAKLEVFN740',' ', 'SMOIR', 114122, 'E910', '7894561','1',' ', ' ', ' ', ' ', 'DV910', ' ', 'COWBOY', 'NDENT',114200,8000

from all_tables t1

where t1.owner = 'CRPDTA'

and (t1.table_name like 'F55%'

or t1.table_name like 'F56%'

or t1.table_name like 'F57%'

or t1.table_name like 'F58%'

or t1.table_name like 'F59%')

;

commit ;

insert into ol910.f9860

select distinct trim(t1.table_name), trim(t2.table_text) || ' ','55','55', 'TBLE', '2', max(substr(t3.column_name,1,2)),'C',' ', ' ',' ','N',' ','N',0,' ',' ',' ',' ', ' ', ' ', ' ', 0, ' ', ' ', 'JDBTRIG', ' ', ' ', ' ', ' ', ' ', ' ', ' ', 'COWBOY', 'SMOIR', 'SMOIR', 115000, 5000

from all_tables t1, all_tab_columns t3, qsys2.systables@a01proddta t2

where t1.owner = 'CRPDTA'

and (t1.table_name like 'F55%'

or t1.table_name like 'F56%'

or t1.table_name like 'F57%'

or t1.table_name like 'F58%'

or t1.table_name like 'F59%')

and t2.table_schema = 'A73A01DTA'

and trim(t1.table_name) = trim(t2.table_name)

and trim(t2.table_name) = trim(t3.table_name)

and t3.owner = 'CRPDTA'

and not exists

(select 1 from ol910.f9860 where trim(siobnm) = trim(t2.table_name))

group by trim(t1.table_name), trim(t2.table_text) || ' ','55','55', 'TBLE', '2','C',' ', ' ',' ','N',' ','N',0,' ',' ',' ',' ', ' ', ' ', ' ', 0, ' ', ' ', 'JDBTRIG', ' ', ' ', ' ', ' ', ' ', ' ', ' ', 'COWBOY', 'SMOIR', 'SMOIR', 115000, 5000 ;

commit ;

Damn that 400 for starting table columns with a $ sign, you cannot do this in oracle. Of course the FIRST table of 110 that I test in E1 has a $ as the first char of the columns. This is defined in F98711 and F9860 and the table itself. So I need to change all of these quickly.

update ol910.F9860 set sipfx = replace(sipfx,'$','Z') where sifuno = 'TBLE' and SIPFX like '$%';

update dv910.f98711 set tdsqlc = replace(tdsqlc,'$','Z') where tdsqlc like '$%' ;

SELECT 'ALTER TABLE CRPDTA.' || table_name || ' RENAME COLUMN "' || column_name || '" TO ' || replace(column_name,'$','Z') ||';'

from all_tab_columns t1

where t1.owner = 'CRPDTA'

and (t1.table_name like 'F55%'

or t1.table_name like 'F56%'

or t1.table_name like 'F57%'

or t1.table_name like 'F58%'

or t1.table_name like 'F59%')

and column_name like '$%';

This creates a bunch of:

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OUB06" TO ZOUB06;

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OSRP2" TO ZOSRP2;

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OSRP3" TO ZOSRP3;

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OSRP4" TO ZOSRP4;

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OSRP5" TO ZOSRP5;

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OSRP6" TO ZOSRP6;

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OSRP7" TO ZOSRP7;

ALTER TABLE CRPDTA.F554108 RENAME COLUMN "$OSRP8" TO ZOSRP8;

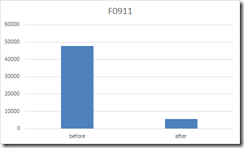

In summary I can now use UTB or databrowser on any one of the 110 custom world tables. Sure some of the custom DD items have rubbish descriptions, this is cosmetic and could be fixed. The data is there and queryable in E1. This table definitions can be promoted from DV and included in package builds as valid objects.