This is basically a

cut and paste of the release notes for 9.2.5 from learnjde.com

- a great resource. I'll put a small amount of commentary around

this.

Firstly, please

plan to be on 64bit. This does not have to be a massive project.

You'll just need to ensure that your 3rd party products (if you load them

through JDE) can run in a 64bit environment. I recall that there are some

output management and AP workflow tools that might struggle with 64bit.

Please do not expect dramatic performance improvements, as there will not be

any. Please do not expect your kernels or UBE's to use more than 4GB of

ram each (if they are, I think you might have other issues...). This is a

good move for security and compliance reasons.

From the horses

mouth:

Beginning with EnterpriseOne Tools

Release 9.2.5, JD Edwards is transitioning the Tools Foundation compatibility

for 32-bit into the Sustaining Support lifecycle phase:

- ·

With

JD Edwards EnterpriseOne Tools 9.2 Update 5 (9.2.5), Oracle will cease delivery

of a 32-bit JD Edwards Tools Foundation for Oracle Solaris and HP-UX.

- ·

JD

Edwards EnterpriseOne Tools 9.2 Update 5 (9.2.5) will be the final Tools

Foundation release to be delivered as a 32-bit compiled foundation for Oracle

Linux, Red Hat Enterprise Linux, Microsoft Windows, IBM i on Power Systems, and

IBM AIX.

Following is a list

of all the enhancements. Guess what? 75% of them are for

orchestrations, this is also very nice. If you are not using

orchestrations. If a developer suggests to you that you should implement

a flat file based integration solution - fire them and use

orchestrations. You know that an orch and SFTP, can upload CSV can call a

UBE if required - can do everything that old school development can do - but

it's done on the glass and does not need package builds. Start using it!

I cannot use the

title Digital Transformation, so I’ve changed it to Platform Modernisation. Hopefully I can drill down on some of these

enhancements when the time is right.

Platform

Modernisation

Assertion

Framework for Orchestrations

Orchestrations are

a powerful way to automate EnterpriseOne transactions and integrate to

third-party systems and IoT devices. The integrity of an orchestration has two

critical aspects: first, it runs without error, and second, it produces the

data that the designer expects. The Assertion Framework enables the

orchestration designer to specify, in other words, to "assert" the

values that are expected to be produced by an orchestration. For example, the

designer might assert that a value is expected to be within a certain range, or

even match a specific numeric value. If the orchestration produces a value

outside that range, the details of the failed assertion are displayed for

investigation. For customers who are considering the use of orchestrations as test

cases, the Assertion Framework provides a way to define objective success

criteria.

Enhanced

Configuration Between Enterprise Servers and AIS Server

This feature

provides the system administrator with more control over configuring the

associations between EnterpriseOne enterprise servers and Application Interface

Services (AIS) servers. Customers who deploy a single enterprise server for all

their environments can now associate that enterprise server with AIS servers in

multiple environments. This configurability facilitates the segregation of AIS

servers across environments, such as development, test, and production, while

making it possible for a single enterprise server to serve all the

environments.

Allow Variables

in REST File Uploads

As part of its

ability to invoke third-party services through its REST connector, Orchestrator

can also upload various content types, for example, EnterpriseOne media objects

and files to a REST-enabled content management system. This enhancement enables

the orchestration designer to use variables in the definition of the connector.

For example, the name of a file might be represented by a variable, enabling

the orchestration to dynamically determine the file to upload, thereby

expanding the flexibility of this feature.

Configurable AIS

Session Initialization

This feature

provides you more choice and control over how system resources are used to

initialize user sessions. The Application Interface Services (AIS) server is a

powerful framework for exposing EnterpriseOne applications and data as

services. In addition to being available to external clients, the AIS server

has also been used as part of the internal EnterpriseOne architecture to enable

certain functionalities, such as UX One components, EnterpriseOne Search, and

form extensions.For users who use these features, the system will establish

sessions with both the EnterpriseOne HTML server and the EnterpriseOne AIS

server, and each session consumes system resources. For users who do not use

these features, the system will not establish an AIS session. This enhancement

enables the system administrator to configure user sessions to avoid

initializing an AIS session, thus conserving system resources.

Extend

EnterpriseOne User Session to Externally Hosted Web Applications

The UX One

framework offers EnterpriseOne users a converged, flexible, and configurable

user interface for all their enterprise applications. Even web-based

third-party applications can be configured into EnterpriseOne pages and

external forms. This enhancement further improves the user experience by

enabling EnterpriseOne and third-party applications to share certain data, such

as session information, to provide a more integrated user experience and ensure

efficient use of shared resources.

Optimized

Retrieval of Large Data Sets by Orchestrator

Of all the

capabilities of Orchestrator, retrieving data from EnterpriseOne tables or

applications is among the most common and essential. Some usage patterns entail

retrieving data in very small transactional "bursts," for example, to

get an inventory count of a single item. Other usage patterns entail retrieving

very large data sets, for example, to load or synchronize a complete customer

list from EnterpriseOne to a third-party system. This enhancement provides

performance optimizations for Orchestrator to be able to retrieve very large

data sets—possibly thousands of rows—from EnterpriseOne tables and pass the

results in the orchestration output. The orchestration designer may also have

the output written to disk and exclude it from the orchestration response to

prevent the response from becoming very large.

User Experience

Form Extensibility Improvements

With Tools Release 9.2.5, Form

Extensibility has been enhanced with the ability to unhide the business view

columns on a grid that have been hidden using Form Design Aid (FDA). This

enhancement enables users to use a form extension to unhide those business view

columns and add them into a grid without creating a customized form in FDA.

Similar to an existing feature in a

personalized form, users can now mark a field as required in a form extension,

and this setting will apply to all the versions of the application.

These features significantly reduce the

time, effort, and cost required to give end users a streamlined user

experience.

Learn more about Form Extensions on the Extensibility page,

and other User Defined Objects on the User Defined

Objects (UDOs) page on LearnJDE.

Enhanced Search Criteria and Actions for Enterprise Search

JD Edwards EnterpriseOne Search helps

users quickly find and act on JD Edwards transactions and data as part of their

daily activities. To further increase user productivity, EnterpriseOne Search

has been enhanced with the following capabilities:

Exact match search: The system retrieves search results that

are an exact match to the keyword that a user enters. For example, if a user

wants to search for all the sales orders for red bikes and enters “red bike” as

the keyword, the system will display only the orders that contain “red bike” in

the description. This capability helps in narrowing down search results,

thereby improving user productivity.

Ability to export the search results: Users can export the

search results to a .csv file so that they can analyze the data or import the

data to a different application outside of EnterpriseOne.

Ability to specify query and personal form for related

action: When a Related Action is defined to execute a JD Edwards application,

the search designer can now enter a specific query and specify a personal form

for the application. Simplification of the application interface and input of

associated data improves the user experience.

Improved Help

The

application-level Help option on JD Edwards EnterpriseOne forms has been

enhanced to enable users to search separately for EnterpriseOne documentation

and UPK documentation. This improvement enables users to easily locate specific

information that addresses their questions. In addition, JD Edwards now

provides direct access to www.LearnJDE.com, the JD Edwards resource library,

from the user drop-down menu in the EnterpriseOne menu bar. This improvement

provides users quick access to the current collateral and education resources

across all areas of JD Edwards.

System

Automation

Virtual Batch

Queues

JD Edwards batch

processes (UBE and reports) continue to be critical for customers’ business

processes. With Tools Release 9.2.5, JD Edwards has improved the scalability,

flexibility, and availability of JD Edwards UBE batches with Virtual Batch

Queues (VBQ). This enhancement reduces the dependency on queues per individual

server and gives users the flexibility to run and rerun jobs on a group of congruent

batch servers. It further enables users to maximize the system resources by

having their UBEs processed by any available server from the batch cluster

dynamically. The centralized repository for output ensures easy accessibility

to report output no matter when or where the batch was submitted and the output

was retrieved. Overall, this feature provides high availability for batch

processes, expedites the processing of batch jobs, and helps users achieve

scale to ensure performance of batch jobs.

Development Client

Simplification

While JD Edwards

has delivered frameworks to reduce the need for customizations, there are still

some scenarios where customers need to create and maintain customizations. In

Tools 9.2.5, JD Edwards has greatly reduced the time, effort, and resources

required to install and maintain the development client by removing the

requirement for the local database. The removal of the local database reduces

storage and memory requirements for a development client, resulting in faster

EnterpriseOne client installations. This feature also streamlines Object

Management Workbench (OMW) activities, Save/Restore operations, and eliminates

the need for a mandatory check-in for objects during package deployment. This

enhancement results in improved productivity compared to the previous package

installation process. The underlying package build and deployment processes are

also streamlined, improving the throughput of the software development life

cycle.

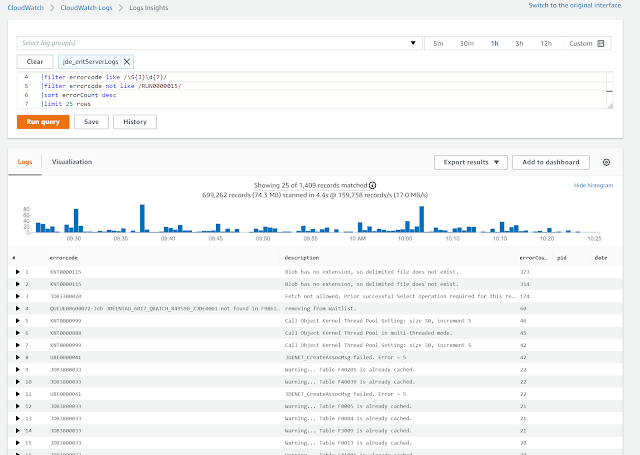

Automated

Troubleshooting for Kernel Failures

Tools 9.2.5

expedites the troubleshooting process for kernel failures by automatically

identifying the kernel failures, capturing the log files along with the problem

call stack, and sending an email notification. This automated process

identifies the cause for the kernel failure and enables the administrator to

configure whom to notify (the corresponding team) so that corrective action is

performed promptly. The notification contains all the contextual information,

which helps to streamline the time spent in resolving issues and working with

Oracle Support.

Web-Enabled Object

Management Workbench (OMW)

Tools Release 9.2.5

enhances the web version of OMW to support the management of development

objects: applications, UBEs, business functions, and all other development

objects. Developers, testers, and administrators can now transfer objects

through a browser-based application within the EnterpriseOne web user

interface. This feature eliminates the need to access the development client

for object transfer, streamlines the life cycle of the OMW projects and

objects, and generates the potential for remote and automated processing of OMW

projects and objects.

Web-Based Package

Build and Deployment

With Tools Release

9.2.5, the key system administration applications for package assembly, package

build, and package deployment can be run through the EnterpriseOne web

interface. These functions were previously available only through the

development client. This enhancement creates opportunities for automating and

scheduling package builds and sending the related notifications through

orchestrations, further extending the digital platform for JD Edwards system

administration tasks.

Security

Continuous

Enhancements for a Secure Technology Stack

To ensure security

compliance and eliminate vulnerabilities around JD Edwards EnterpriseOne

deployments, there is a continuous need to enhance the product security and

uplift the components to the latest versions. This Tools release includes the

following security enhancement:

Support for long

and complex database passwords

Automated TLS

Configuration Between Server Manager Console and Agents

This feature

simplifies and automates the configuration of Transport Layer Security (TLS)-

based communications between the Server Manager console and the Server Manager

agents. The Secure Sockets Layer (SSL) provides secure communication between

the applications across a network by enabling message encryption, data

integrity, and authentication; therefore, it is imperative to keep this

component updated and configured to your JD Edwards servers. This feature

eliminates the need to run multiple platform-specific manual commands for

importing the certificate files into Java (keystore and truststore) files,

which can be a complicated and error-prone process. For secure JMX-based

communication, both TLS v1.3 (for Oracle WebLogic Server with Java 1.8 update

261 or higher) and TLS v1.2 (for Oracle WebLogic Server and IBM WebSphere

Application Server) versions of the TLS protocol are supported.

Open Platforms

Support for 64-bit

JD Edwards on UNIX Platforms

To ensure that

customers are running their business-critical EnterpriseOne system on a stable,

supportable infrastructure, and to enable them to leverage the capabilities of

the latest and emerging cloud services, hardware platforms, and supporting

technologies, EnterpriseOne deployments must remain compliant with currently

available platform stacks. Beginning with EnterpriseOne Tools Release 9.2.5, JD

Edwards announces support for running the EnterpriseOne Tools foundation in

full 64-bit mode on the below UNIX platforms:

· Oracle Solaris on SPARC

· IBM AIX on POWER Systems

· HP-UX Itanium

· The below platforms already support 64-bit

JD Edwards:

· Oracle Linux

· Microsoft Windows Server

· IBM i on POWER Systems

· Red Hat Enterprise Linux

With EnterpriseOne

Tools Release 9.2.5, JD Edwards also announces the withdrawal of support for

running EnterpriseOne in 32-bit mode on the below platforms:

· Oracle Solaris on SPARC

· HP-UX Itanium

· Platform Certifications

JD Edwards

EnterpriseOne deployments depend on a matrix of interdependent platform

components from Oracle and third-party vendors. The product support life cycle

of these components is driven by their vendors, creating a continuous need to

certify the latest versions of these products to give customers a complete

technology stack that is functional, well-performing, and supported by the

vendors. This Tools release includes the following platform certifications:

· Oracle Database 19c:

· IBM AIX on POWER Systems (64-bit JD Edwards)

· HP-UX Itanium (64-bit JD Edwards)

· Oracle Solaris on SPARC (64-bit JD Edwards)

· Local database for deployment server

· Oracle Linux 8

· Oracle SOA Suite 12.2.1.4

· Microsoft Windows Server 2019 support for

deployment server and Development Client

· Microsoft Edge Chromium Browser 85

· IBM i 7.4 on POWER Systems

· IBM MQ Version 9.1

· Red Hat Enterprise Linux 8

· Mozilla Firefox 78 ESR

· Google Chrome 85

Support for the

below platforms are withdrawn with Tools Release 9.2.5:

· Oracle Enterprise Manager 12.1

· Microsoft Windows Server 2012 R2

· Microsoft SQL Server 2014

· Microsoft Edge Browser 42 and 44

· Apple iOS 11

JD Edwards

EnterpriseOne certifications are posted on the Certifications tab in My Oracle Support.

The updated version

of JD Edwards EnterpriseOne Platform Statement of Direction is published on My

Oracle Support (Document ID 749393.1). See this document for a summary of

recent and planned certifications as well as important information about

withdrawn certifications.