Aggregate data requests can be a little fiddly, but here we go. I find that the best way to test these is to have a simple orchestration, a simple data request and use the orchestrator client to keep running the queries until you get it right.

I’ve very confident to say that if we are updating a data request service request, I know that as soon as you save in the orchestration studio, you can run that change immediately in orchestration client.

I’m going to show 3 scenarios with 3 different outputs.

Scenario 1

Simple single return of a users total value of PO’s that need approval: No aggregation.

This when tested:

Will return all orders and amounts, ordered by amount desc for the user# that I pass in.

As you can see from the above, I have my summary. Note that this is a record set, but not and aggregation.

Scenario 2:

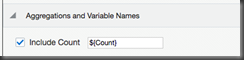

This is slightly more complex, as I’m using aggregation in the query. You can see that I’m including the “generic count”

And the sum of the amount

This results in

Note that this is a single row response because I’m using “assigned to” in the where clause. This is using aggregation and also using sum. A nice query – notice how there is no record set because of the where clause being a single response. This is ALSO the case because I’ve selected:

This is very important. If you include count as above, you must formulate your query to only respond with a single row back – trick for beginners.

Scenario 3:

This is the grizzly bear. I want a result set, which summarises all people who have outstanding PO’s. I want to know the value and the count of the outstanding PO’s too. I want to only see those with a value greater than 0.

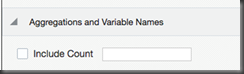

The above screen shows all of these elements (do not include count)

This will prevent it from working.

The elements of this are that there is a where clause, as I do not really want one – but am forced, I’ll say where AN8 > 1! I want the sum and count of orders grouped by the person responsible. I also order by order amount desc. I could order by the count of distinct orders too.

Everything else will work as designed, here is the return

Problem:

Aggregation with group by is not a return set.

Note that I want to send an email for each result in the returnset, but I think when you use aggregates, there is only a single return set… Doh!