AS400 data conversion problems are killing me at the moment. Well not literally, but they are very annoying. If EBCIDIC was more like ASCII, I think that many of my problems would go away.

I have a site that is moving from AS/400 to Oracle, I have no idea how some of their data has made it into the base AS400 tables, but it's there. There are a lot of crazy characters (tab, 'ç¢éDz, etc) in the tables. So when I do a copy to oracle I get the old ORA-12899. This is quite a pain, it only occurs when the field on the 400 is full (when there is 30 chars) and one of them is a special char. It seems that the single byte ascii code does not exist for the char, so when it gents banged into a CHAR field in oracle it spills into two characters.

When you do a length(field) of the column, you see 31, yet when you do a length(field) you get 30. So it takes more bytes to represent the string. That is a pain! of course I've tried various combinations of setting blank trimming, but alas none of this works for my situation.

SQL> sho parameter blank_trimming ;

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

blank_trimming boolean TRUE

Now, I'm thinking that there might be something with the CHARACTERSET values, but then again... I'm moving to unicode eventually - I'm not there yet.

SELECT value$ FROM sys.props$ WHERE name = 'NLS_CHARACTERSET' ;

SELECT value$ FROM sys.props$ WHERE name = 'NLS_NCHAR_CHARACTERSET' ;

AL32UTF8 *this is not supported

UTF8 *this is not support

Great! Thanks for the top database provisioning! This is my problem, but I'm looking at a different solution that complete database rebuild.

I appreciate that I should have UTF16 (or WTF16!) for the NLS_CHARACTERSET. This would probably sort everything out, and why you ask?

AL32UTF8 is a varying width characterset, which means that the code for a character can be 1, 2, 3, or 4 bytes long.

So, I have some of my characters that are being represented by multiple bytes. What can I do...

This is a temporary problem for me, as I want to convert to unicode asap, so I don't really feel the need for changing the NLS_CHARACTERSET.

I'm going to cleanse the data in oracle - how might you ask? Easy, I tell you... but it's quite a bit of work.

Firsty, I used R98403 to copy all my data from AS/400 to oracle. I was then able to read the reports or reconcile the row counts and know which tables had issues with ORA-12899 - about 12.

F0006 for example, this is how to cleanse out the crappy characters:

--segregate the problem data only

create table TESTDTA.F0006broke as (

select *

from crpdta.f0006@cms t1

WHERE not exists

(select 1

from testdta.f0006 t2

where t1.mcmcu = t2.mcmcu

));

--find what is wrong with the data

insert into testdta.f0006 select * from testdta.f0006broke;

--review data

select lengthb(mcdl01), length(mcdl01), translate(mcdl01,'ç¢éDz'||chr(5)||chr(13)||chr(10), 'cceC2 '),

lengthb(translate(mcdl01,'ç¢éDz'||chr(5)||chr(13)||chr(10), 'cceC2 ')) FROM testdta.f0006broke ;

--fix data

update testdta.f0006broke set mcdl01 = translate(mcdl01,'ç¢éDz'||chr(5)||chr(13)||chr(10), 'cceC2 ') ;

commit;

--insert data

insert into testdta.f0006 select * from testdta.f0006broke;

commit ;

that was not too hard now was it?

You will also note that the NCHAR_CHARACTERCODESET is also wrong, it must be The EnterpriseOne Unicode data must be stored in UTF16, not UTF8.

Doh, might be back to the drawing board for this one!

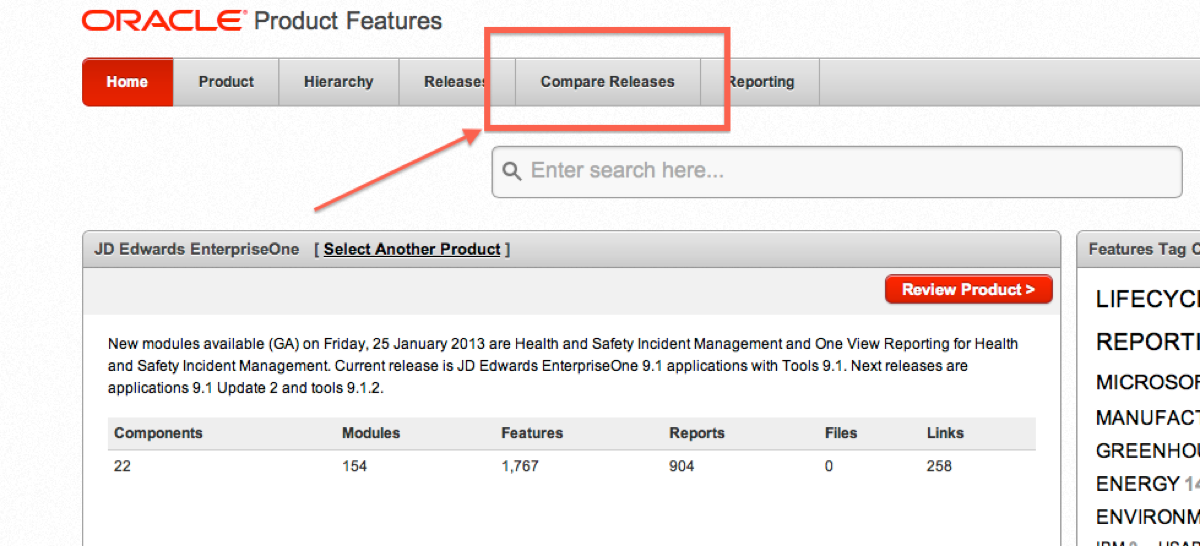

Oracle Product Features (eUVP) -Detailed net change and statement of direction information between all JD Edwards releases (past, present and planned). Replaces paper Upgrade Value Proposition

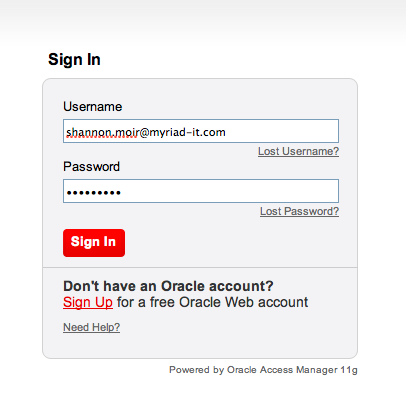

3. Log into MOS

Oracle Product Features (eUVP) -Detailed net change and statement of direction information between all JD Edwards releases (past, present and planned). Replaces paper Upgrade Value Proposition

3. Log into MOS

4. Accept disclaimer

5. Choose JD Edwards EnterpriseOne https://apex.oracle.com/pls/apex/f?p=24153:100:14242335010598:SET_PRODUCT:::PRODUCT_ID,PRODUCT_NAME:208136276869697198410960926144257606832,JD%20Edwards%20EnterpriseOne

6.

4. Accept disclaimer

5. Choose JD Edwards EnterpriseOne https://apex.oracle.com/pls/apex/f?p=24153:100:14242335010598:SET_PRODUCT:::PRODUCT_ID,PRODUCT_NAME:208136276869697198410960926144257606832,JD%20Edwards%20EnterpriseOne

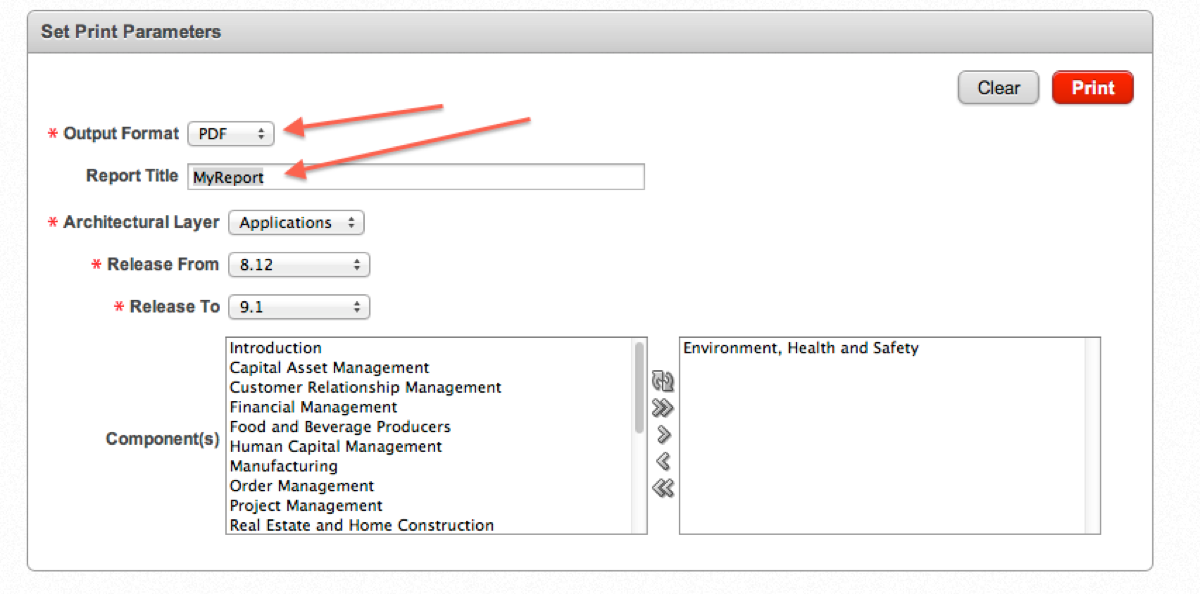

6.  7. Choose your from and to release and also what modules you want to report on.

7. Choose your from and to release and also what modules you want to report on.

8. Choose search

9. Select run report

8. Choose search

9. Select run report

Remember to choose your format, XML, XLS, words, pdf etc

11. Print to download

Remember to choose your format, XML, XLS, words, pdf etc

11. Print to download